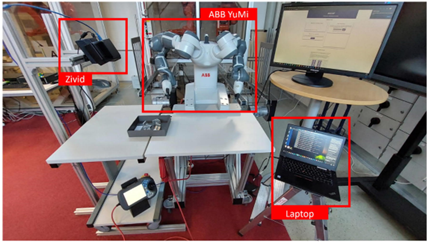

Design and Implementation of a Multimodal System for Human-Robot Interactions in Bin-Picking Operations

The main goal of this thesis was to design and implement a system and a process that create a new use for a collaborative robot cell and teach the system to perform bin-picking operations automatically with unknown parts. The proposed solution also fits the so-called “Smart Factory”, which is considered a fundamental concept of Industry 4.0. The solution consists of four major components: the Orchestrator Application; the Robot Controlling Application; a 3D module (Zivid One+ 3D camera) that finds grasp poses for unknown parts; and M2O2P, which reads hand gestures from smart glove sensor input.
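As a rough illustration of how the four components could interact, the sketch below routes a gesture label to a grasp-pose request and then to a pick command. It is not the thesis implementation: the `Orchestrator` class, the gesture names (`"pick"`), the pose layout, and the two callables standing in for the 3D module and the Robot Controlling Application are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Assumed pose layout: x, y, z, roll, pitch, yaw (hypothetical).
Pose = Tuple[float, float, float, float, float, float]


@dataclass
class Orchestrator:
    """Hypothetical coordinator between gesture input, 3D module and robot."""
    find_grasp_pose: Callable[[], Optional[Pose]]  # stands in for the 3D module
    send_pick: Callable[[Pose], None]              # stands in for the robot application
    log: List[str] = field(default_factory=list)

    def on_gesture(self, gesture: str) -> str:
        """React to one gesture label (as a gesture reader might emit it)."""
        if gesture != "pick":
            # Unknown gestures are ignored rather than treated as errors.
            return "ignored"
        pose = self.find_grasp_pose()
        if pose is None:
            # The 3D module found no graspable part in the bin.
            self.log.append("no_grasp_found")
            return "no_grasp_found"
        self.send_pick(pose)
        self.log.append("pick_sent")
        return "pick_sent"


# Usage with stand-in components.
sent: List[Pose] = []
orchestrator = Orchestrator(
    find_grasp_pose=lambda: (0.1, 0.2, 0.3, 0.0, 0.0, 0.0),
    send_pick=sent.append,
)
result = orchestrator.on_gesture("pick")
```

Passing the 3D module and the robot interface in as callables keeps the coordinator independent of any particular camera or robot SDK, which is one plausible way to keep such components replaceable.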

Emotion-Driven and Control of Human-Robot Interactions

The image presents a brain-computer interface (BCI) approach for adapting cobot parameters to the emotional state of a human worker. The approach uses electroencephalography (EEG) to digitise and interpret the human’s emotional states. The parameters of the cobot are then adjusted immediately to keep the human’s emotional state within a desirable range, increasing confidence and trust between the human and the cobot. The human and the cobot work collaboratively on the assembly of an item, and both roles are indispensable for completing the task. This methodology falls within the scope of Human-AI interaction: it addresses communication between humans and AI solutions by exchanging information between them to create more comfortable and fluid interactions.

During the task, the human wears an Epoc+ headset that records EEG signals, and the cobot in the scene is YuMi, the IRB14000 from ABB Robotics.
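The adaptation loop described above can be sketched as a simple proportional rule. This is only an assumption about how such a loop might look: the scalar `stress` estimate in [0, 1], the `target` level, the `gain`, and the choice of a speed scale as the adjusted cobot parameter are all hypothetical and not taken from the described system.

```python
def adapt_speed(current_speed: float, stress: float, target: float = 0.4,
                gain: float = 0.5, min_speed: float = 0.1,
                max_speed: float = 1.0) -> float:
    """Return a new cobot speed scale given an estimated stress level.

    stress and target are assumed to lie in [0, 1]; the speed scale is
    clamped to [min_speed, max_speed]. All values are illustrative.
    """
    # Positive error means the worker is more stressed than desired.
    error = stress - target
    # Slow down when stress is above target, speed up when it is below.
    new_speed = current_speed - gain * error
    return max(min_speed, min(max_speed, new_speed))


# A stressed worker (0.9) causes the speed scale to drop from 0.8.
slower = adapt_speed(0.8, 0.9)
# A relaxed worker (0.0) lets the speed rise, but never above max_speed.
faster = adapt_speed(0.9, 0.0)
```

Clamping the result keeps the cobot inside a fixed operating range regardless of how noisy the emotional-state estimate is.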

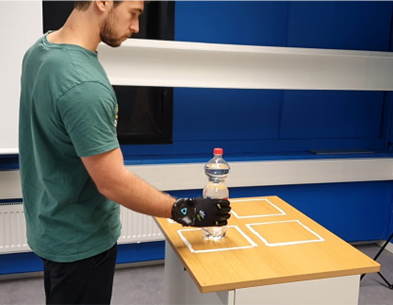

Motion-level Programming by Demonstration System

Programming by Demonstration (PbD) systems provide an intuitive and easy-to-use way for non-programmers to teach robots how to perform different tasks. A motion-level PbD system has been developed in which the user demonstrates the trajectory that the robot must follow with one hand, whose position is tracked by a Leap Motion Controller, while the other hand gives additional commands to the robot through hand gestures captured by a CaptoGlove dataglove. This motion-level system lets users teach the robot how to perform tasks without any prior programming experience.
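A minimal sketch of the recording idea follows, assuming the tracker and the glove have already been merged into a stream of `(position, gesture)` samples. The gesture labels `"start"` and `"stop"`, the `min_step` threshold, and the function name are hypothetical; the real devices' SDKs are not used here.

```python
import math
from typing import Iterable, List, Optional, Tuple

Point = Tuple[float, float, float]


def record_trajectory(samples: Iterable[Tuple[Point, Optional[str]]],
                      min_step: float = 0.01) -> List[Point]:
    """Collect waypoints between a "start" and a "stop" gesture.

    Each sample pairs a tracked hand position with an optional gesture
    label from the other hand. Positions closer than min_step (in the
    tracker's units) to the last waypoint are dropped to reduce jitter.
    """
    recording = False
    waypoints: List[Point] = []
    for position, gesture in samples:
        if gesture == "start":
            recording = True
        elif gesture == "stop":
            recording = False
        if not recording:
            continue
        if not waypoints or math.dist(waypoints[-1], position) >= min_step:
            waypoints.append(position)
    return waypoints


demo = [
    ((0.0, 0.0, 0.0), None),      # ignored: recording has not started
    ((0.0, 0.0, 0.0), "start"),   # first waypoint
    ((0.001, 0.0, 0.0), None),    # dropped: closer than min_step
    ((0.02, 0.0, 0.0), None),     # second waypoint
    ((0.05, 0.0, 0.0), "stop"),   # recording ends, sample not kept
    ((0.1, 0.0, 0.0), None),      # ignored
]
trajectory = record_trajectory(demo)
```

Filtering by distance rather than by time keeps the waypoint count proportional to how far the hand actually moved, which tends to produce shorter robot programs for slow demonstrations.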

Task-level Programming by Demonstration System

A task-level PbD system has been developed for users to teach the robot how to perform complex manipulation tasks. In this system, the user’s demonstration data is recorded by a CaptoGlove dataglove, which captures the fingers’ motion and pressure, and an HTC VIVE Tracker, which captures the hand position and orientation. The operations performed by the user during a manipulation task are segmented and recognized using Multi-order Multivariate Markov Models, which must be trained beforehand. From these, the system obtains the most likely sequence of operations performed by the user, together with the locations where they were executed, and generates an action plan that the robot can later execute. This task-level system provides a powerful solution for users to teach the robot how to perform complex tasks in a natural and intuitive way.