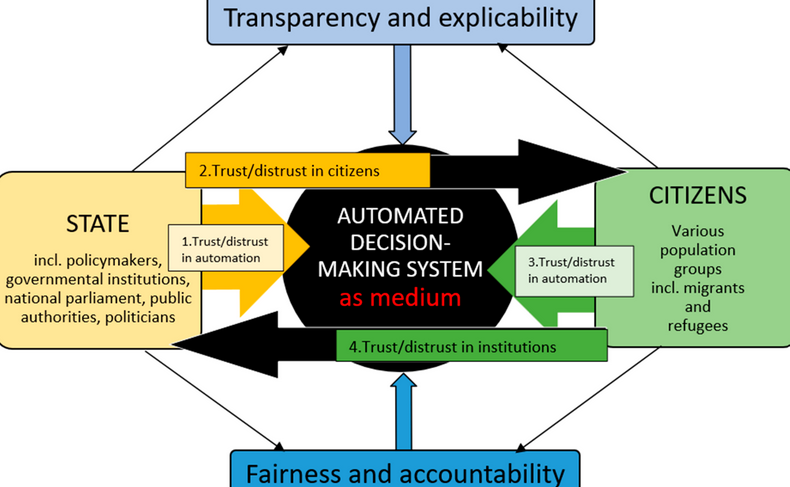

In this approach, institutional trust does not simply mean public trust in institutions (though that is an important component of democratic societies); instead, it refers to the responsive interactions between governmental institutions and citizens. Currently, very little is known about policymakers’ trust or distrust in automated systems, or about how their trust or distrust in citizens is reflected in their interest in implementing these systems in public administration. By analysing a sample of recent studies on automated decision-making, the authors explored the potential of the institutional trust model, identifying how the four dimensions of trust can be used to examine the responsive relationship between citizens and the state. The article contributes to the formulation of future research questions on automated decision-making, underlining that two issues have been overlooked: the impact of automated systems on the socio-economic rights of marginalised citizens in public services, and policymakers’ motivations for deploying automated systems.

Read more: Parviainen, J., Koski, A., Eilola, L., Palukka, H., Alanen, P. & Lindholm, C. (2025). Building and eroding the citizen-state relationship in the era of automated decision-making: Towards a new conceptual model of institutional trust. Social Sciences, 14(3), 178. https://doi.org/10.3390/socsci14030178