Projects

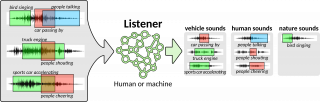

Teaching Machines to Listen

Funded by Academy of Finland, 2020-2025

This project will investigate methods for sound event detection and classification based on noisy data produced by crowdsourcing of annotations, to simulate the way humans learn about their acoustic environment. We work with methods from natural language processing, computational linguistics, and deep learning. We aim to advance state-of-the art by providing novel approaches for learning from noisy data in an efficient way.

Continual learning of sounds with deep neural networks

Funded by Jane and Aatos Erkko Foundation, 5.2024-4.2027

This project investigates continual learning in acoustic environments, by incrementally introducing new information to be learned. The notion of continual learning is used to indicate learning of information in successive bursts, where input data is used to extend the existing model’s knowledge by further training it. The most important aspect of incremental learning is to learn or adapt the model to the new data without forgetting the existing knowledge. When learning about acoustic environments, there are multiple ways such incremental tasks can be expressed, depending on the information available for learning. This work aims to open a few directions of research for incremental learning of audio.

Guided audio captioning for complex acoustic environments (GUIDE)

Funded by Jane and Aatos Erkko Foundation, 2022-2023

This project will investigate audio captioning as textual description of the most important sounds in the scene, guided towards finding the most important content while ignoring other sounds, similar to humans. We are interested to understand to what extent automatically produced audio captioning is suitable for subtitling for deaf and hard-of-hearing, if the manual work required for the descriptions of the non-speech soundtrack can be replaced by automatic methods, similar to the dialogue being translated into subtitles using automatic speech recognition.

This work is a collaboration with Assoc. Prof. Maija Hirvonen (Translation Studies, Linguistics, Tampere University).

Audio-visual scene analysis

Postgraduate research, funded by Tampere University

Shanshan is working on analysis of multimodal information for scene analysis.

The way humans understand the world is not only based on what we hear, but involves all senses. Inspired by this, multi-modal analysis, especially audio-visual analysis, gained increasing popularity in machine learning. Our first study on this topic showed that joint modeling of audio and visual modalities brings significant improvement compared to the individual models for scene classification.

Spatial analysis of acoustic scenes

Postgraduate research

Daniel is investigating methods for utilizing spatial audio in computational acoustic scene analysis.

The project aims at exploiting spatial cues derived from binaural recordings and microphone arrays to provide complex descriptions of acoustic environments. The planned work comprises joint use of several audio tasks, including sound event detection, sound source localization and acoustic scene classification.