Title: Learning in the Dark: Privacy-Preserving Machine Learning using Function Approximation

Authors: Tanveer Khan and Antonis Michalas

Research Artifact: https://gitlab.com/nisec/blind_faith

Venue: Proceedings of the 22nd IEEE International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom’23), 01–03 November 2023, Exeter, U.K.

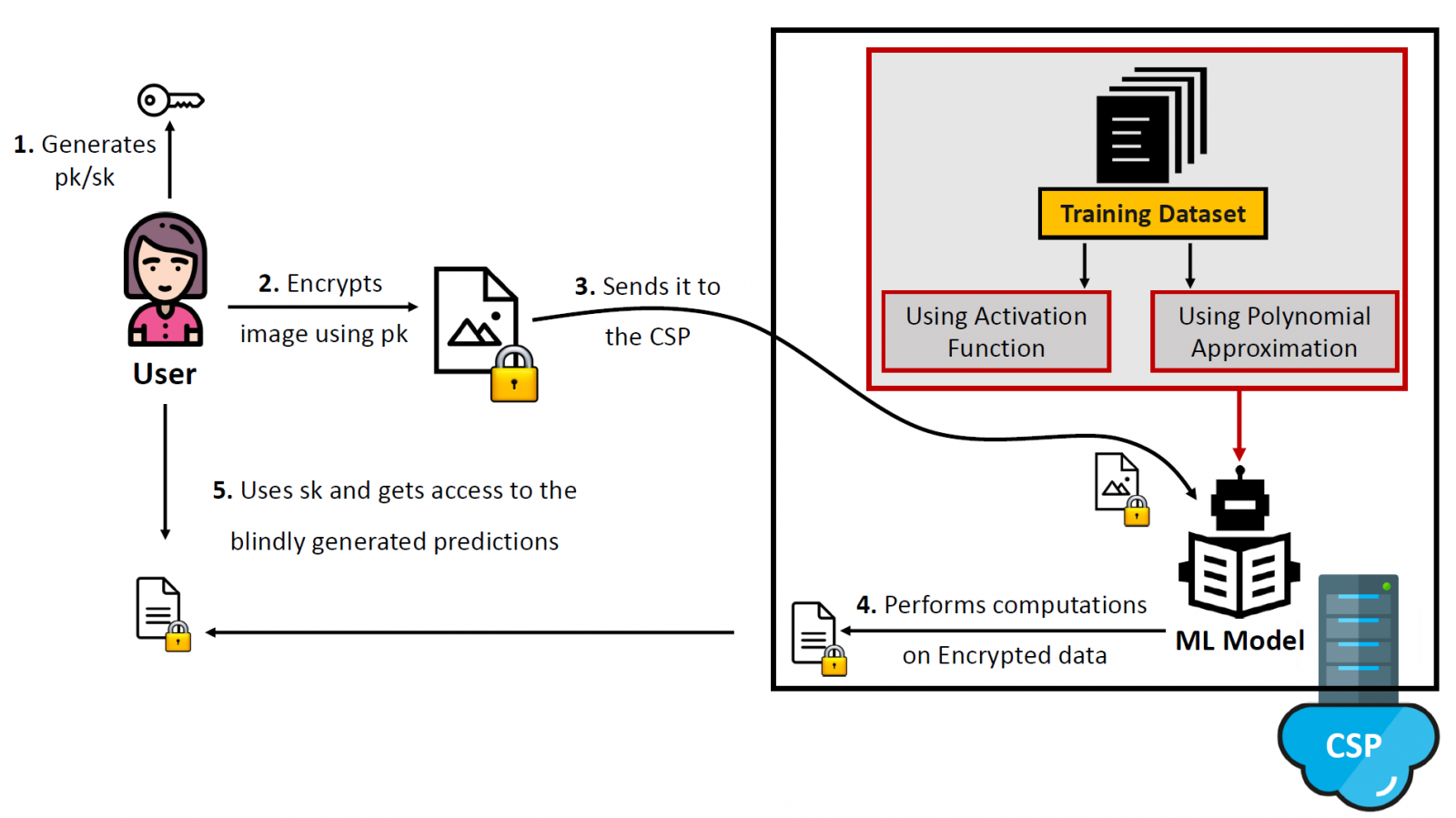

Abstract: Over the past few years, the growing adoption of cloud-based services has driven tremendous growth in machine learning. As a result, various solutions have been proposed in which machine learning models run on a remote cloud provider rather than locally on a user’s machine. However, when such a model is deployed on an untrusted cloud provider, it is vital that the users’ privacy is preserved. To this end, we propose Learning in the Dark, a hybrid machine learning model in which training is performed on plaintext data, but the classification of the users’ inputs is performed directly on homomorphically encrypted ciphertexts. To make our construction compatible with homomorphic encryption, we approximate the ReLU and Sigmoid activation functions using low-degree Chebyshev polynomials. This allows Learning in the Dark to classify encrypted images with high accuracy. Because every computation is performed directly on encrypted data, the output is also generated blindly, relying only on the properties of homomorphic encryption, and the users’ privacy is preserved throughout.
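As a rough illustration of the approximation step, the sketch below builds a low-degree Chebyshev interpolant of Sigmoid and ReLU in plain Python. It is not taken from the research artifact: the function names, the interval [-5, 5], and the degree 3 are assumptions chosen for illustration, and interpolation at Chebyshev nodes is only one of several ways to obtain such a polynomial. The point it shows is that the result is a polynomial, so it can be evaluated with additions and multiplications only, which are the operations homomorphic encryption schemes support.

```python
import math


def chebyshev_coefficients(func, degree, lo, hi):
    """Return c_0..c_degree of the Chebyshev interpolant of func on [lo, hi]."""
    n = degree + 1
    # Angles of the Chebyshev nodes of the first kind on [-1, 1].
    thetas = [math.pi * (k + 0.5) / n for k in range(n)]
    # Map each node to [lo, hi] and sample the function there.
    samples = [
        func(0.5 * (hi - lo) * math.cos(t) + 0.5 * (hi + lo)) for t in thetas
    ]
    coeffs = []
    for j in range(n):
        s = sum(f * math.cos(j * t) for f, t in zip(samples, thetas))
        coeffs.append((1.0 if j == 0 else 2.0) * s / n)
    return coeffs


def chebyshev_eval(coeffs, x, lo, hi):
    """Evaluate sum_j c_j T_j(t) with the Clenshaw recurrence.

    Only additions and multiplications are applied to x, apart from a
    rescaling by constants that are known in advance.
    """
    t = (2.0 * x - lo - hi) / (hi - lo)  # map x from [lo, hi] to [-1, 1]
    b1 = b2 = 0.0
    for c in reversed(coeffs[1:]):
        b1, b2 = c + 2.0 * t * b1 - b2, b1
    return coeffs[0] + t * b1 - b2


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    return max(0.0, x)


if __name__ == "__main__":
    LO, HI, DEGREE = -5.0, 5.0, 3  # assumed interval and degree
    for name, func in (("sigmoid", sigmoid), ("relu", relu)):
        coeffs = chebyshev_coefficients(func, DEGREE, LO, HI)
        grid = [LO + i * (HI - LO) / 200 for i in range(201)]
        max_err = max(
            abs(func(x) - chebyshev_eval(coeffs, x, LO, HI)) for x in grid
        )
        print(f"{name}: max abs error on [{LO}, {HI}] = {max_err:.4f}")
```

Running the script prints the maximum absolute error of each approximation on the chosen interval. Raising the degree generally reduces that error but increases the number of multiplications needed on ciphertexts, so the degree is a trade-off between accuracy and cost.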