Publications

Selected publications from the past years

Visual Interaction Research Group

Rantala, J., Majaranta, P., Kangas, J., Isokoski, P., Akkil, D., Špakov, O., and Raisamo, R. (2020) Gaze Interaction With Vibrotactile Feedback: Review and Design Guidelines, Human–Computer Interaction, 35:1, 1-39. DOI: 10.1080/07370024.2017.1306444

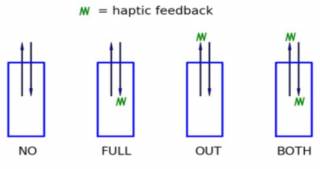

Vibrotactile feedback is widely used in mobile devices because it provides a discreet and private feedback channel. Gaze-based interaction, on the other hand, is useful in various applications due to its unique capability to convey the focus of interest. Gaze input is naturally available as people typically look at things they operate, but feedback on gaze input is primarily visual. Gaze interaction and the use of vibrotactile feedback have been two parallel fields of human–computer interaction research with a limited connection. Our aim was to build this connection by studying the temporal and spatial mechanisms of supporting gaze input with vibrotactile feedback. The results of a series of experiments showed that the temporal distance between a gaze event and vibrotactile feedback should be less than 250 ms to ensure that the input and output are perceived as connected. The effectiveness of vibrotactile feedback was largely independent of the body location of the vibrotactile actuators. Vibrotactile feedback performed as well as auditory and visual feedback. Vibrotactile feedback can be especially beneficial when other modalities are unavailable or difficult to perceive. Based on the findings, we present design guidelines for supporting gaze interaction with vibrotactile feedback.

Majaranta, P., Räihä, K.-J., Hyrskykari, A., and Špakov, O. (2019) Eye Movements and Human-Computer Interaction. In: Klein, C. and Ettinger, U. (eds) Eye Movement Research. Studies in Neuroscience, Psychology and Behavioral Economics, pp. 971-1015. Springer, Cham. DOI: 10.1007/978-3-030-20085-5_23

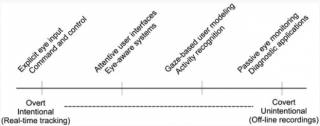

Gaze provides an attractive input channel for human-computer interaction because of its capability to convey the focus of interest. Gaze input allows people with severe disabilities to communicate with their eyes alone. Advances in eye tracking technology and its reduced cost make gaze an increasingly interesting addition to the conventional modalities in everyday applications. For example, gaze-aware games can enhance the gaming experience by providing timely effects at the right location, knowing exactly where the player is focusing at each moment. However, using gaze both as a viewing organ and as a control method poses some challenges. In this chapter, we give an introduction to using gaze as an input method. We show how to use gaze as an explicit control method and how to exploit it subtly in the background as an additional information channel. We summarize research on the application of different types of eye movements in interaction and present research-based design guidelines for coping with typical challenges. We also discuss the role of gaze in multimodal, pervasive and mobile interfaces and contemplate ideas for future developments.

Siirtola, H., Špakov, O., Istance, H., and Räihä, K.-J. (2019). Shared gaze in collaborative visual search. International Journal of Human-Computer Interaction. 35:18, 1693-1705. DOI: 10.1080/10447318.2019.1565746

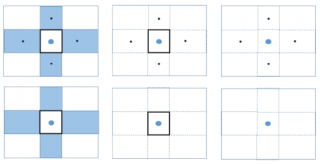

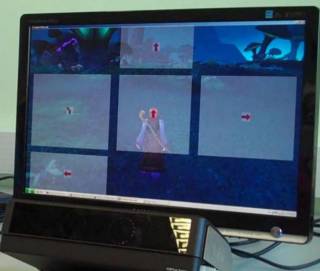

Collaboration improves efficiency, avoids duplication of efforts, improves goal-awareness, and makes working generally more pleasurable. While collaboration is desirable, it introduces additional costs because of the required coordination. In this article, we study how visual search is affected by gaze-sharing collaboration. There is evidence that pairs of visual searchers using gaze-only sharing are more efficient than single searchers. We extend this result by investigating if groups of three searchers are more efficient, and if and how the groups of searchers develop their search strategy. We conducted an experiment to understand how the collaboration develops when groups of one to three participants perform a visual search task by collaborating with shared gaze. The task was to state if the target was present among distractors. Our results show that users are able to develop an efficient search and division-of-labor strategy when the only collaboration method is gaze-sharing.

Špakov, O., Istance, H., Hyrskykari, A., Siirtola, H., and Räihä, K.-J. (2018). Improving the performance of eye trackers with limited spatial accuracy and low sampling rates for reading analysis by heuristic fixation-to-word mapping. Behavior Research Methods, 51, 2661–2687. DOI: 10.3758/s13428-018-1120-x

The recent growth in low-cost eye-tracking systems makes it feasible to incorporate real-time measurement and analysis of eye position data into activities such as learning to read. It also enables field studies of reading behavior in the classroom and other learning environments. We present a study of the data quality provided by two remote eye trackers, one of them a low-sampling-rate, low-cost system. We then present two algorithms for mapping the fixations derived from the data to the words being read: one for immediate (real-time) mapping and the other for deferred (post hoc) mapping. Following this, an evaluation study is reported. Both studies were carried out in a classroom of a Finnish elementary school with second-grade students. This study shows very high success rates in automatically mapping fixations to the lines of text being read when the mapping is deferred. The success rates for immediate mapping are comparable with those obtained in earlier studies, even though here the data were collected some 10 min after the initial calibration of low-sampling-rate (30 Hz) remote eye trackers, rather than in a laboratory setting with high-sampling-rate trackers. The results provide a solid basis for developing systems for classrooms and other learning environments that can give immediate automatic support with reading and share data between a group of learners and their teacher. This makes new approaches to learning reading and comprehension skills possible.
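To make the idea of deferred mapping more concrete, the following minimal Python sketch snaps fixations to text lines in two passes. It is not the heuristic described in the paper. It assumes that each fixation is already available as a pixel position, that the vertical centre of every text line is known, and that calibration error shows up mainly as a constant vertical offset, which it estimates as the median residual of a first nearest-line pass. The names `Fixation`, `nearest_line` and `map_fixations_deferred` are hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Fixation:
    x: float  # horizontal position in pixels
    y: float  # vertical position in pixels (y grows downwards)


def nearest_line(y: float, line_centers: List[float]) -> int:
    """Index of the text line whose vertical centre is closest to y (needs >= 1 line)."""
    return min(range(len(line_centers)), key=lambda i: abs(y - line_centers[i]))


def map_fixations_deferred(fixations: List[Fixation], line_centers: List[float],
                           max_distance: float) -> List[Optional[int]]:
    """Hypothetical two-pass (post hoc) fixation-to-line mapping.

    Pass 1 snaps every fixation to its nearest line and records the vertical
    residual. The median residual is treated as a constant vertical offset
    (e.g. calibration drift). Pass 2 removes that offset and snaps again;
    fixations still farther than max_distance from any line map to None.
    """
    if not fixations:
        return []
    residuals = [f.y - line_centers[nearest_line(f.y, line_centers)] for f in fixations]
    offset = median(residuals)
    result: List[Optional[int]] = []
    for f in fixations:
        corrected = f.y - offset
        idx = nearest_line(corrected, line_centers)
        result.append(idx if abs(corrected - line_centers[idx]) <= max_distance else None)
    return result
```

For example, with lines centred at y = 100, 140 and 180 px and most fixations drifting about 15 px downwards, a stray fixation at y = 122 px is first snapped to the second line, but once the median offset is removed it falls on the first line. A deferred mapper can afford this because it sees all fixations before deciding; a real-time mapper would have to estimate the offset incrementally.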

Kangas, J., Rantala, J., Akkil, D., Isokoski, P., Majaranta, P., and Raisamo, R. (2017) Vibrotactile Stimulation of the Head Enables Faster Gaze Gestures. International Journal of Human-Computer Studies, 98, 62-71. DOI: 10.1016/j.ijhcs.2016.10.004

Gaze gestures are a promising input technology for wearable devices, especially in the smart glasses form factor, because gaze gesturing is unobtrusive and leaves the hands free for other tasks. We were interested in how gaze gestures can be enhanced with vibrotactile feedback. We studied the effects of haptic feedback on the head and of haptic prompting on the speed of completing gaze gestures. The vibrotactile stimulation was given to the skin of the head through actuators in a sunglasses frame. The haptic feedback enabled about 10% faster gaze gestures with more consistent completion times. Longer haptic prompts tended to result in longer gesture durations. However, the magnitude of the increase was marginal. Our results can inform the design of efficient gaze gesture user interfaces and recognition algorithms.

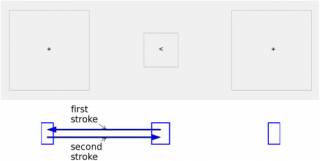

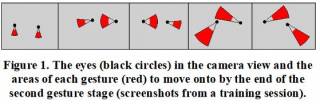

Istance, H. and Hyrskykari, A. (2017). Supporting making fixations and the effect on gaze gesture performance. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17), ACM, pp. 3022–3033. DOI: 10.1145/3025453.3025920

Gaze gestures are deliberate patterns of eye movements that can be used to invoke commands. They are less reliant on accurate measurement and calibration than other gaze-based interaction techniques. They may be used with wearable displays fitted with eye tracking capability, or as part of an assistive technology. The visual information on the display that can act as fixation targets may or may not be sparse, and it will vary over time. The paper describes an experiment investigating how the amount of information provided on a display to assist in making fixations affects gaze gesture performance. The impact of providing visualization guides and small fixation targets on gesture completion time and error rates is presented. The number and durations of fixations made during gesture completion are used to explain differences in performance resulting from practice and from the direction of eye movement.
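Counting fixations and measuring their durations first requires detecting fixations in the raw gaze samples. As an illustration only, the sketch below uses the well-known dispersion-threshold (I-DT) algorithm; the abstract does not say which detector the study used, and the dispersion and minimum-duration thresholds are left as parameters rather than values taken from the paper. The function and type names are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float, float]           # (timestamp_ms, x_px, y_px), sorted by time
Fixation = Tuple[float, float, float, float]  # (start_ms, duration_ms, centroid_x, centroid_y)


def dispersion(points: List[Sample]) -> float:
    """Dispersion as used by I-DT: x-range plus y-range of the points."""
    xs = [p[1] for p in points]
    ys = [p[2] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations_idt(samples: List[Sample], max_dispersion: float,
                         min_duration_ms: float) -> List[Fixation]:
    """Dispersion-threshold fixation detection (simple O(n^2) version)."""
    fixations: List[Fixation] = []
    i, n = 0, len(samples)
    while i < n:
        # Grow an initial window that spans at least min_duration_ms.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break  # too little data left to form a fixation
        if dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the points stay within the dispersion limit.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            start = window[0][0]
            cx = sum(p[1] for p in window) / len(window)
            cy = sum(p[2] for p in window) / len(window)
            fixations.append((start, window[-1][0] - start, cx, cy))
            i = j + 1  # continue after the detected fixation
        else:
            i += 1  # window too spread out: drop its first sample and retry
    return fixations
```

Each returned tuple gives a fixation's start time, duration and centroid, so the number of fixations made during a gesture is the length of the list and the durations can be read from the second field.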

Akkil, D. and Isokoski, P. (2016) Gaze Augmentation in Egocentric Video Improves Awareness of Intention. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (CHI ’16), ACM, pp. 1573-1584. DOI: 10.1145/2858036.2858127

Video communication using head-mounted cameras could be useful to mediate shared activities and support collaboration. The growing popularity of wearable gaze trackers presents an opportunity to add gaze information to egocentric video. We hypothesized three potential benefits of gaze-augmented egocentric video in collaborative scenarios: supporting deictic referencing, enabling grounding in communication, and enabling better awareness of the collaborator’s intentions. Previous research on using egocentric videos for real-world collaborative tasks has failed to show clear benefits of gaze point visualization. We designed a study, deconstructing a collaborative car navigation scenario, to specifically target the value of gaze-augmented video for intention prediction. Our results show that viewers of gaze-augmented video could predict the direction taken by a driver at a four-way intersection more accurately and more confidently than viewers of the same video without the superimposed gaze point. Our study demonstrates that gaze augmentation can be useful and encourages further study in real-world collaborative scenarios.

Akkil, D., Kangas, J., Rantala, J., Isokoski, P., Špakov, O., and Raisamo, R. (2015) Glance Awareness and Gaze Interaction in Smartwatches. In Proceedings of the Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems (EA-CHI’15), ACM, pp. 1271-1276. DOI: 10.1145/2702613.2732816

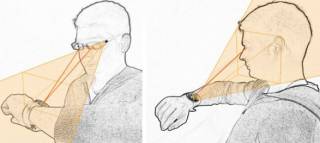

Smartwatches are widely available and increasingly adopted by consumers. The most common way of interacting with smartwatches is either touching a screen or pressing buttons on the sides. However, such techniques require using both hands. We propose glance awareness and active gaze interaction as alternative techniques to interact with smartwatches. We describe an experiment conducted to understand user preferences for visual and haptic feedback on a “glance” at the wristwatch. Following the glance, the users interacted with the watch using gaze gestures. Our results showed that user preferences differed depending on the complexity of the interaction. No clear preference emerged for complex interaction. For simple interaction, haptics was the preferred glance feedback modality.

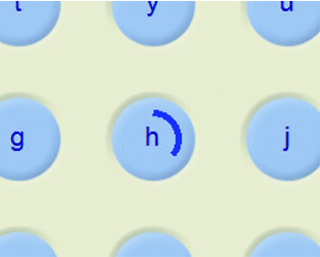

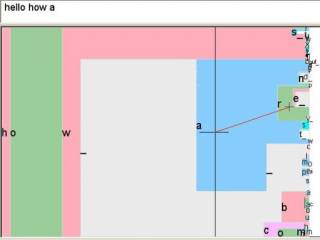

Räihä, K-J. (2015) Life in the Fast Lane: Effect of Language and Calibration Accuracy on the Speed of Text Entry by Gaze. In Abascal, J., Barbosa, S., Fetter, M., Gross, T., Palanque, P., Winckler, M. (eds.) Human-Computer Interaction – INTERACT 2015: 15th IFIP TC 13 International Conference, Part I. Cham: Springer, pp. 402-417. DOI: 10.1007/978-3-319-22701-6_30

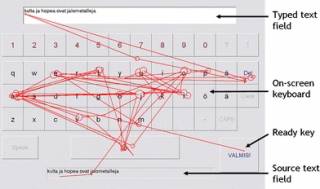

Numerous techniques have been developed for text entry by gaze, and similarly, a number of evaluations have been carried out to determine the efficiency of the solutions. However, the results of the published experiments are inconclusive, and it is unclear what causes the differences in their findings. Here we look particularly at the effect of the language used in the experiment. A study in which participants entered text both in English and in Finnish does not show an effect of language structure: the entry rates were reasonably close to each other. The role of other explanatory factors, such as calibration accuracy and experimental procedure, is discussed.

Majaranta, P. and Bulling, A. (2014) Eye Tracking and Eye-Based Human–Computer Interaction. In: Fairclough S., Gilleade K. (eds) Advances in Physiological Computing. Human–Computer Interaction Series. Springer. DOI: 10.1007/978-1-4471-6392-3_3

Eye tracking has a long history in medical and psychological research as a tool for recording and studying human visual behavior. Real-time gaze-based text entry can also be a powerful means of communication and control for people with physical disabilities. Following recent technological advances and the advent of affordable eye trackers, there is a growing interest in pervasive attention-aware systems and interfaces that have the potential to revolutionize mainstream human-technology interaction. In this chapter, we provide an introduction to the state of the art in eye tracking technology and gaze estimation. We discuss challenges involved in using a perceptual organ, the eye, as an input modality. Examples of real-life applications are reviewed, together with design solutions derived from research results. We also discuss how to match the user requirements and key features of different eye tracking systems to find the best system for each task and application.

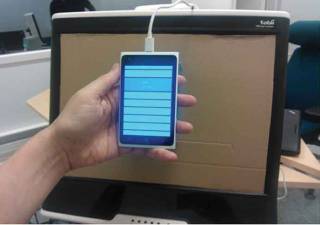

Kangas, J., Akkil, D., Rantala, J., Isokoski, P., Majaranta, P. and Raisamo, R. (2014). Gaze gestures and haptic feedback in mobile devices. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI’14), ACM, pp. 435-438. DOI: 10.1145/2556288.2557040

Anticipating the emergence of mobile devices capable of gaze tracking, we are investigating the use of gaze as an input modality in handheld mobile devices. We conducted a study combining gaze gestures with vibrotactile feedback. Gaze gestures were used as an input method in a mobile device, and vibrotactile feedback as a new way to confirm interaction events. Our results show that vibrotactile feedback significantly improved the use of gaze gestures. The tasks were completed faster and rated easier and more comfortable when vibrotactile feedback was provided.

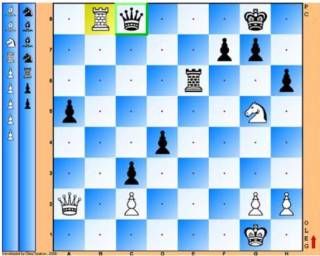

Vickers, S., Istance, H., and Hyrskykari, A. (2013) Performing Locomotion Tasks in Immersive Computer Games with an Adapted Eye-Tracking Interface. ACM Trans. Access. Comput. 5, 1, Article 2 (September 2013), 33 pages. DOI: 10.1145/2514856

Young people with severe physical disabilities may benefit greatly from participating in immersive computer games. In-game tasks can be fun, engaging, educational, and socially interactive. But for those who are unable to use traditional methods of computer input such as a mouse and keyboard, there is a barrier to interaction that they must first overcome. Eye-gaze interaction is one method of input that can potentially achieve the levels of interaction required for these games. How we use eye-gaze or the gaze interaction technique depends upon the task being performed, the individual performing it, and the equipment available. To fully realize the impact of participation in these environments, techniques need to be adapted to the person’s abilities. We describe an approach to designing and adapting a gaze interaction technique to support locomotion, a task central to immersive game playing. This is evaluated by a group of young people with cerebral palsy and muscular dystrophy. The results show that by adapting the interaction technique, participants are able to significantly improve their in-game character control.

Hyrskykari, A., Istance, H., and Vickers, S. (2012) Gaze gestures or dwell-based interaction? In Proceedings of the Symposium on Eye Tracking Research and Applications (ETRA ’12). ACM, pp. 229–232. DOI: 10.1145/2168556.2168602

The two cardinal problems recognized with gaze-based interaction techniques are how to avoid unintentional commands and how to overcome the limited accuracy of eye tracking. Gaze gestures are a relatively new technique for giving commands, which has the potential to overcome these problems. We present a study that compares gaze gestures with dwell selection as an interaction technique. The study involved 12 participants and was performed in the context of using an actual application. The participants gave commands to a 3D immersive game using gaze gestures and dwell icons. We found not only that gaze gestures are a feasible means of issuing commands in the course of game play, but also that they performed at least as well as dwell selections. The gesture condition produced fewer than half as many errors as the dwell condition. The study shows that gestures provide a robust alternative to dwell-based interaction, with substantially reduced reliance on positional accuracy.

Špakov, O., and Majaranta, P. (2012) Enhanced Gaze Interaction Using Simple Head Gestures. In Proceedings of the 14th International Conference on Ubiquitous Computing (UbiComp '12: PETMEI 2012), ACM, pp. 705-710. DOI: 10.1145/2370216.2370369

We propose a combination of gaze pointing and head gestures for enhanced hands-free interaction. Instead of the traditional dwell-time selection method, we experimented with five simple head gestures: nodding, turning left/right, and tilting left/right. The gestures were detected from the eye-tracking data by a range-based algorithm, which proved accurate enough to recognize nodding and left-directed gestures. The gaze estimation accuracy did not noticeably suffer from the quick head motions. Participants identified nodding as the best gesture for occasional selection tasks and rated the other gestures as promising methods for navigation (turning) and functional mode switching (tilting). In general, dwell time works well for repeated tasks such as eye typing. However, for multimodal games or transient interactions in pervasive and mobile environments, we believe a combination of gaze and head interaction could potentially provide a natural and more accurate interaction method.

Gaze Interaction and Applications of Eye Tracking: Advances in Assistive Technologies (2011), Majaranta, P., Aoki, H., Donegan, M., Hansen, D.W., Hansen, J.P., Hyrskykari, A., & Räihä, K-J. IGI Global. DOI: 10.4018/978-1-61350-098-9

Recent advances in eye tracking technology will allow for a proliferation of new applications. Improvements in interactive methods using eye movement and gaze control could result in faster and more efficient human-computer interfaces, benefitting users with and without disabilities.

Gaze Interaction and Applications of Eye Tracking: Advances in Assistive Technologies focuses on interactive communication and control tools based on gaze tracking, including eye typing, computer control, and gaming, with special attention to assistive technologies. For researchers and practitioners interested in the applied use of gaze tracking, the book offers instructions for building a basic eye tracker from off-the-shelf components, gives practical hints on building interactive applications, presents smooth and efficient interaction techniques, and summarizes the results of effective research on cutting-edge gaze interaction applications.

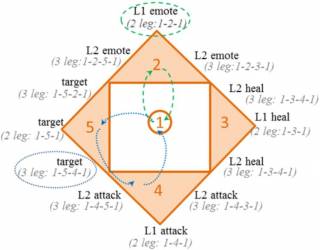

Istance, H., Hyrskykari, A., Immonen, L., Mansikkamaa, S., and Vickers, S. (2010) Designing gaze gestures for gaming: an investigation of performance. In Proceedings of the 2010 Symposium on Eye-Tracking Research & Applications (ETRA ’10), ACM, pp. 323–330. DOI: 10.1145/1743666.1743740

To enable people with motor impairments to use gaze control to play online games and take part in virtual communities, new interaction techniques are needed that overcome the limitations of dwell clicking on icons in the game interface. We have investigated gaze gestures as a means of achieving this. We report the results of an experiment with 24 participants that examined performance differences between different gestures. We were able to predict the effect on performance of the number of legs in the gesture and the primary direction of eye movement in a gesture. We also report the outcomes of user trials in which 12 experienced gamers used the gaze gesture interface to play World of Warcraft. All participants were able to move around and engage other characters in fighting episodes successfully. Gestures were good for issuing specific commands such as spell casting, and less good for continuous control of movement compared with other gaze interaction techniques we have developed.
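The notion of gesture legs can be illustrated with a short sketch that turns a sequence of fixation positions into a list of stroke directions. This is a hypothetical simplification, not the recognizer used in the study. It assumes screen coordinates with y growing downwards, quantizes each eye movement into one of eight 45° sectors, ignores movements shorter than a chosen amplitude, and merges consecutive strokes in the same direction into one leg. The names `stroke_direction` and `gesture_legs` are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in pixels; y grows downwards on screen

# Sector names in order of increasing atan2 angle (clockwise on screen, since y points down).
DIRECTIONS = ["right", "down-right", "down", "down-left",
              "left", "up-left", "up", "up-right"]


def stroke_direction(p: Point, q: Point) -> str:
    """Quantize the movement from p to q into one of eight directions."""
    angle = math.degrees(math.atan2(q[1] - p[1], q[0] - p[0])) % 360.0
    # Shift by half a sector so that, e.g., -22.5..22.5 degrees maps to "right".
    return DIRECTIONS[int(((angle + 22.5) % 360.0) // 45.0)]


def gesture_legs(fixations: Sequence[Point], min_amplitude: float) -> List[str]:
    """Return the legs of a gaze gesture as a list of directions."""
    legs: List[str] = []
    for p, q in zip(fixations, fixations[1:]):
        if math.hypot(q[0] - p[0], q[1] - p[1]) < min_amplitude:
            continue  # small movement: treat as fixation jitter, not a stroke
        direction = stroke_direction(p, q)
        if not legs or legs[-1] != direction:
            legs.append(direction)  # a change of direction starts a new leg
    return legs
```

For instance, fixations at the four corners of a square visited clockwise from the top left yield the legs right, down and left, so `len(gesture_legs(...))` gives the number of legs. Whether diagonal strokes should form their own class, as here, is a design choice a real recognizer would need to validate.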

Majaranta, P., Ahola, U.-K., and Špakov, O. (2009) Fast Gaze Typing with an Adjustable Dwell Time. In Proceedings of the Conference on Human Factors in Computing Systems, CHI’09, ACM, pp. 357-360. DOI: 10.1145/1518701.1518758

Previous research shows that text entry by gaze using dwell time is slow, about 5-10 words per minute (wpm). These results are based on experiments with novices using a constant dwell time, typically between 450 and 1000 ms. We conducted a longitudinal study to find out how fast novices learn to type by gaze using an adjustable dwell time. Our results show that the text entry rate increased from 6.9 wpm in the first session to 19.9 wpm in the tenth session. Correspondingly, the dwell time decreased from an average of 876 ms to 282 ms, and the error rates decreased from 1.28% to 0.36%. The achieved typing speed of nearly 20 wpm is comparable with the result of 17.3 wpm achieved in an earlier, similar study with Dasher.
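The two quantities in this abstract, dwell time and entry rate, can be illustrated with a small Python sketch. `DwellSelector` is a hypothetical dwell-time selector whose threshold can be changed at run time, as an adjustable-dwell keyboard requires; it assumes each gaze sample has already been hit-tested to a key identifier (or `None` when gaze is on no key). `words_per_minute` follows the common text-entry convention that one word is five characters, including spaces, with timing running from the first to the last character entered. Neither is the software used in the study.

```python
from typing import Optional


class DwellSelector:
    """Select a key once gaze has rested on it for dwell_ms milliseconds."""

    def __init__(self, dwell_ms: float) -> None:
        self.dwell_ms = dwell_ms  # may be shortened or lengthened by the user at any time
        self._target: Optional[str] = None
        self._start_ms = 0.0
        self._fired = False

    def update(self, t_ms: float, target: Optional[str]) -> Optional[str]:
        """Feed one gaze sample; return the key when its dwell completes."""
        if target != self._target:
            # Gaze moved to a new key (or off all keys): restart the dwell timer.
            self._target = target
            self._start_ms = t_ms
            self._fired = False
            return None
        if target is not None and not self._fired and t_ms - self._start_ms >= self.dwell_ms:
            self._fired = True  # select once; gaze must leave the key to select it again
            return target
        return None


def words_per_minute(transcribed: str, seconds: float) -> float:
    """Entry rate with 1 word = 5 characters; timing starts at the first character."""
    return (len(transcribed) - 1) * 60.0 / (5.0 * seconds)
```

Requiring gaze to leave a key before it can be selected again is one way to avoid unintended repeated letters; a system that supports repeated keys would need an explicit rule for them.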

Isokoski, P., Joos, M., Špakov, O., and Martin, B. (2009) Gaze Controlled Games. In Stephanidis, C., Majaranta, P., & Bates, R. (eds.) Universal Access in the Information Society: Communication by Gaze Interaction, 8(4), Springer, pp. 323-337. DOI: 10.1007/s10209-009-0146-3

The quality and availability of eye tracking equipment have been increasing while costs have been decreasing. These trends increase the possibility of using eye trackers for entertainment purposes. Games that can be controlled solely through movement of the eyes would be accessible to persons with decreased limb mobility or control. On the other hand, use of eye tracking can change the gaming experience for all players, by offering richer input and enabling attention-aware games. Eye tracking is not currently widely supported in gaming, and games specifically developed for use with an eye tracker are rare. This paper reviews past work on eye tracker gaming and charts future development possibilities in different sub-domains within it. It argues that, based on the user input requirements and gaming contexts, conventional computer games can be classified into groups that offer fundamentally different opportunities for eye tracker input. In addition to the inherent design issues, there are challenges and varying levels of support for eye tracker use in the technical implementations of the games.

Hyrskykari, A., Ovaska, S., Majaranta, P., Räihä, K.-J., and Lehtinen, M. (2008) Gaze Path Stimulation in Retrospective Think-Aloud. Journal of Eye Movement Research, 2(4). DOI: 10.16910/jemr.2.4.5

For a long time, eye tracking has been thought of as a promising method for usability testing. During the last couple of years, eye tracking has finally started to live up to these expectations, at least in terms of its use in usability laboratories. We know that the user’s gaze path can reveal usability issues that would otherwise go unnoticed, but a common understanding of how best to make use of eye movement data has not been reached. Many usability practitioners seem to have intuitively started to use gaze path replays to stimulate recall for a retrospective walkthrough of the usability test. We review the research on think-aloud protocols in usability testing and the use of eye tracking in the context of usability evaluation. We also report our own experiment in which we compared the standard, concurrent think-aloud method with the gaze path stimulated retrospective think-aloud method. Our results suggest that the gaze path stimulated retrospective think-aloud method produces more verbal data, and that the data are more informative and of better quality, as the drawbacks of concurrent think-aloud are avoided.

Istance, H., Bates, R., Hyrskykari, A., and Vickers, S. (2008) Snap clutch, a moded approach to solving the Midas touch problem. In Proceedings of the 2008 symposium on Eye tracking research & applications (ETRA ’08), ACM, pp. 221–228. DOI: 10.1145/1344471.1344523

This paper proposes a simple approach to an old problem, that of the ‘Midas Touch’. It uses modes to enable different types of mouse behavior to be emulated with gaze, and gestures to switch between these modes. A lightweight gesture is also used to switch gaze control off when it is not needed, thereby removing a major cause of the problem. The ideas have been trialed in Second Life, which is characterized by a feature-rich set of interaction techniques and a 3D graphical world. The use of gaze with this type of virtual community is of great relevance to severely disabled people, as it can enable them to be in the community on a similar basis to able-bodied participants. The assumption here, though, is that this group will use gaze as a single modality and that dwell will be an important selection technique. The Midas Touch Problem needs to be considered in the context of fast dwell-based interaction. The solution proposed here, Snap Clutch, is incorporated into the mouse emulator software. The user trials reported here show this to be a very promising way of dealing with some of the interaction problems that users of these complex interfaces face when using gaze by dwell.
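A moded design of this kind can be sketched as a small state machine. The sketch below is hypothetical: it assumes that a mode is chosen by holding gaze beyond a particular screen edge for a minimum time, and the mode names, the edge-to-mode assignment and the minimum off-screen time are illustrative assumptions rather than the published Snap Clutch configuration. The `OFF` mode shows how a lightweight gesture can suspend gaze control so that looking around no longer triggers dwell selections.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    OFF = "gaze control suspended"
    LEFT_CLICK = "dwell emits a left click"
    RIGHT_CLICK = "dwell emits a right click"
    DRAG = "dwell starts or ends a drag"


# Hypothetical assignment of screen edges to modes.
EDGE_TO_MODE = {"top": Mode.LEFT_CLICK, "left": Mode.RIGHT_CLICK,
                "right": Mode.DRAG, "bottom": Mode.OFF}


def exit_edge(x: float, y: float, width: float, height: float) -> Optional[str]:
    """Name of the screen edge the gaze point lies beyond, or None if on screen."""
    if y < 0:
        return "top"
    if y >= height:
        return "bottom"
    if x < 0:
        return "left"
    if x >= width:
        return "right"
    return None


class ModeSwitcher:
    def __init__(self, width: float, height: float, min_offscreen_ms: float) -> None:
        self.width, self.height = width, height
        self.min_offscreen_ms = min_offscreen_ms
        self.mode = Mode.LEFT_CLICK
        self._edge: Optional[str] = None
        self._since_ms = 0.0

    def update(self, t_ms: float, x: float, y: float) -> Mode:
        """Feed one gaze sample and return the (possibly new) current mode."""
        edge = exit_edge(x, y, self.width, self.height)
        if edge is None:
            self._edge = None  # back on screen: any partial glance is cancelled
        elif edge != self._edge:
            self._edge, self._since_ms = edge, t_ms  # a glance beyond a new edge begins
        elif t_ms - self._since_ms >= self.min_offscreen_ms:
            self.mode = EDGE_TO_MODE[edge]  # glance held long enough: switch mode
        return self.mode

    @property
    def dwell_enabled(self) -> bool:
        """Dwell selections are ignored while gaze control is switched off."""
        return self.mode is not Mode.OFF
```

A dwell selector would consult `dwell_enabled` before acting, so that in the `OFF` mode the user can look freely at the virtual world without issuing commands.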

Tuisku, O., Majaranta, P., Isokoski, P., and Räihä, K-J. (2008) Now Dasher! Dash away! longitudinal study of fast text entry by Eye Gaze. In Proceedings of the 2008 symposium on Eye tracking research & applications (ETRA ’08), ACM, pp. 19–26. DOI: 10.1145/1344471.1344476

Dasher is one of the best known inventions in the area of text entry in recent years. It can be used with many input devices, but studies on user performance with it are still scarce. We ran a longitudinal study where 12 participants transcribed Finnish text with Dasher in ten 15-minute sessions using a Tobii 1750 eye tracker as a pointing device. The mean text entry rate was 2.5 wpm during the first session and 17.3 wpm during the tenth session. Our results show that very high text entry rates can be achieved with eye-operated Dasher, but only after several hours of training.

Majaranta, P., MacKenzie, I.S., Aula, A., and Räihä, K-J. (2006) Effects of feedback and dwell time on eye typing speed and accuracy. Universal Access in the Information Society, 5, 199–208. DOI: 10.1007/s10209-006-0034-z

Eye typing provides a means of communication that is especially useful for people with disabilities. However, most related research addresses technical issues in eye typing systems, and largely ignores design issues. This paper reports experiments studying the impact of auditory and visual feedback on user performance and experience. Results show that feedback impacts typing speed, accuracy, gaze behavior, and subjective experience. Also, the feedback should be matched with the dwell time. Short dwell times require simplified feedback to support the typing rhythm, whereas long dwell times allow extra information on the eye typing process. Both short and long dwell times benefit from combined visual and auditory feedback. Six guidelines for designing feedback for gaze-based text entry are provided.

Siirtola, H. and Räihä, K-J. (2006) Interacting with parallel coordinates. Interacting with Computers, 18(6), pp. 1278–1309. DOI: 10.1016/j.intcom.2006.03.006

Parallel coordinate visualizations have a reputation of being difficult to understand, expert-only representations. We argue that this reputation may be partially unfounded, because many of the parallel coordinate browser implementations lack essential features. This paper presents a survey of current interaction techniques for parallel coordinate browsers and compares them to the visualization design guidelines in the literature. In addition, we report our experiences with parallel coordinate browser prototypes, and describe an experiment where we studied the immediate usability of parallel coordinate visualizations. In the experiment, 16 database professionals performed a set of tasks both with the SQL query language and a parallel coordinate browser. The results show that although the subjects had doubts about the general usefulness of the parallel coordinate technique, they could perform the tasks more efficiently with a parallel coordinate browser than with their familiar query language interface.

Siirtola, H. and Mäkinen, E. (2005) Constructing and Reconstructing the Reorderable Matrix. Information Visualization, 4(1), pp. 32–48. DOI: 10.1057/palgrave.ivs.9500086

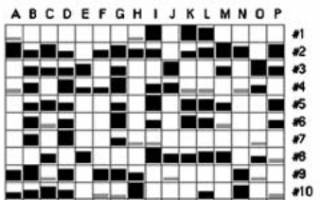

We consider the backgrounds, applications, implementations, and user interfaces of the reorderable matrix originally introduced by Jacques Bertin. As a new tool for handling the matrix, we propose a new kind of interface for interactive cluster analysis. As the main tool to order the rows and columns, we use the well-known barycenter heuristic. Two user tests are performed to verify the usefulness of the automatic tools.

Špakov, O., and Miniotas, D. (2005) Gaze-Based Selection of Standard-Size Menu Items. In Proceedings of the International Conference on Multimodal Interfaces (ICMI ’05), ACM, pp. 124–128. DOI: 10.1145/1088463.1088486

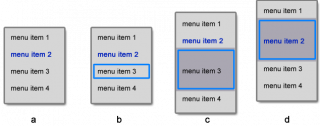

With recent advances in eye tracking technology, eye gaze is gradually gaining acceptance as a pointing modality. Its relatively low accuracy, however, necessitates enlarged controls in eye-based interfaces, which makes their design rather peculiar. Another factor impairing pointing performance is the limited robustness of an eye tracker’s calibration. To facilitate pointing at standard-size menus, we developed a technique that uses dynamic target expansion for online correction of the eye tracker’s calibration. The correction is based on the relative change in the gaze point location upon expansion. A user study suggests that the technique affords a dramatic six-fold improvement in selection accuracy. This is traded off against a much smaller reduction in performance speed (39%). The technique is thus believed to contribute to the development of universal-access solutions that support navigation through standard menus by eye gaze alone.

Surakka, V., Illi, M., and Isokoski, P. (2004) Gazing and frowning as a new human–computer interaction technique. ACM Trans. Appl. Percept. 1, 1 (July 2004), 40–56. DOI: 10.1145/1008722.1008726

The present aim was to study a new technique for human–computer interaction. It combined two modalities, voluntary gaze direction and voluntary facial muscle activation, for object pointing and selection. Fourteen subjects performed a series of pointing tasks with the new technique and with a mouse. At short distances the mouse was significantly faster than the new technique. However, there were no statistically significant differences between the techniques at medium and long distances. Fitts’ law analyses were performed both using only error-free trials and using data that also included error trials (i.e., effective target width). In all cases both techniques appeared to follow Fitts’ law, although with effective target width the correlation coefficient was smaller for the new technique (R = 0.776) than for the mouse (R = 0.991). The regression slopes suggested that at very long distances (i.e., beyond 800 pixels) the new technique might be faster than the mouse. The new technique showed promising results after only a short practice period, and in the future it could be especially useful for physically challenged persons.

Miniotas, D., Špakov, O., and MacKenzie, I. S. (2004) Eye Gaze Interaction with Expanding Targets. In Extended Abstracts of the Conference on Human Factors in Computing Systems (EA-CHI’04), ACM, pp. 1255-1258. DOI: 10.1145/985921.986037

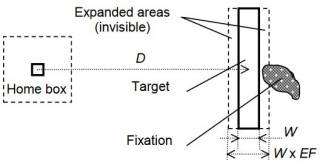

Recent evidence on the performance benefits of expanding targets during manual pointing raises a provocative question: Can a similar effect be expected for eye gaze interaction? We present two experiments to examine the benefits of target expansion during an eye-controlled selection task. The second experiment also tested the efficiency of a “grab-and-hold algorithm” to counteract inherent eye jitter. Results confirm the benefits of target expansion both in pointing speed and accuracy. Additionally, the grab-and-hold algorithm affords a dramatic 57% reduction in error rates overall. The reduction is as much as 68% for targets subtending 0.35 degrees of visual angle. However, there is a cost which surfaces as a slight increase in movement time (10%). These findings indicate that target expansion coupled with additional measures to accommodate eye jitter has the potential to make eye gaze a more suitable input modality.

Majaranta, P., and Räihä, K-J. (2002) Twenty years of eye typing: systems and design issues. In Proceedings of the 2002 symposium on Eye tracking research & applications (ETRA ’02), ACM, pp. 15–22. DOI: 10.1145/507072.507076

Eye typing provides a means of communication for severely handicapped people, even those who are only capable of moving their eyes. This paper considers the features, functionality and methods used in the eye typing systems developed in the last twenty years. Primarily concerned with text production, the paper also addresses other communication-related issues, among them customization and voice output.

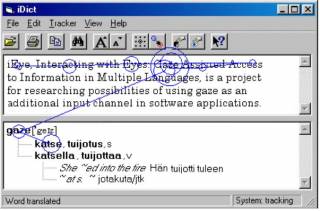

Hyrskykari, A., Majaranta, P., Aaltonen, A., and Räihä, K-J. (2000) Design Issues of iDict: A Gaze-Assisted Translation Aid. In S. N. Spencer (Ed.), Proceedings of the Symposium on Eye Tracking Research and Applications (ETRA 2000), ACM, pp. 9–14. DOI: 10.1145/355017.355019

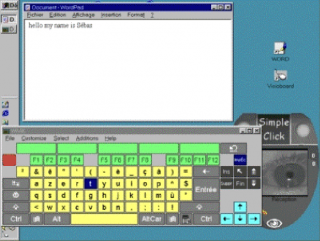

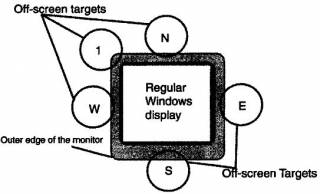

Isokoski, P. (2000) Text input methods for eye trackers using off-screen targets. In Proceedings of the 2000 symposium on Eye tracking research & applications (ETRA ’00), ACM, pp. 15–21. DOI: 10.1145/355017.355020

Text input with eye trackers can be implemented in many ways, such as on-screen keyboards or context-sensitive menu-selection techniques. We propose the use of off-screen targets and various schemes for decoding target hit sequences into text. Off-screen targets help to avoid the Midas touch problem and conserve display area. However, the number and location of the off-screen targets are a major usability issue. We discuss the use of Morse code, our Minimal Device Independent Text Input Method (MDITIM), QuikWriting, and Cirrin-like target arrangements. Furthermore, we describe our experience with an experimental system that implements eye-tracker-controlled MDITIM for the Windows environment.

Siirtola, H. (2000) Direct manipulation of parallel coordinates. IEEE Conference on Information Visualization. An International Conference on Computer Visualization and Graphics, pp. 373–378. DOI: 10.1109/IV.2000.859784

This paper introduces two novel techniques to manipulate parallel coordinates. Both techniques are dynamic in nature as they encourage one to experiment and discover new information through interacting with a data set. The first technique, polyline averaging, makes it possible to dynamically summarise a set of polylines and can hence replace computationally much more demanding methods, such as hierarchical clustering. The other new technique interactively visualises correlation coefficients between polyline subsets, helping the user to discover new information in the data set. Both techniques are implemented in a Java-based parallel coordinate browser. In conclusion, examples of the use of these techniques in visual data mining are also explored.

For full publication lists, please check the team members’ Google Scholar pages:

Aulikki Hyrskykari, Poika Isokoski, Howell Istance, Jari Kangas, Päivi Majaranta, Saila Ovaska, Harri Siirtola, Kari-Jouko Räihä, Oleg Špakov

Dissertations supervised by VIRG members:

Roope Raisamo, Juha Lehikoinen, Markku Turunen, Mika Käki, Anne Aula, Aulikki Hyrskykari, Johanna Höysniemi, Jaakko Hakulinen, Harri Siirtola, Oleg Špakov, Päivi Majaranta, Ying Liu, Tomi Heimonen, Juha Leino, Selina Sharmin, Deepak Akkil,