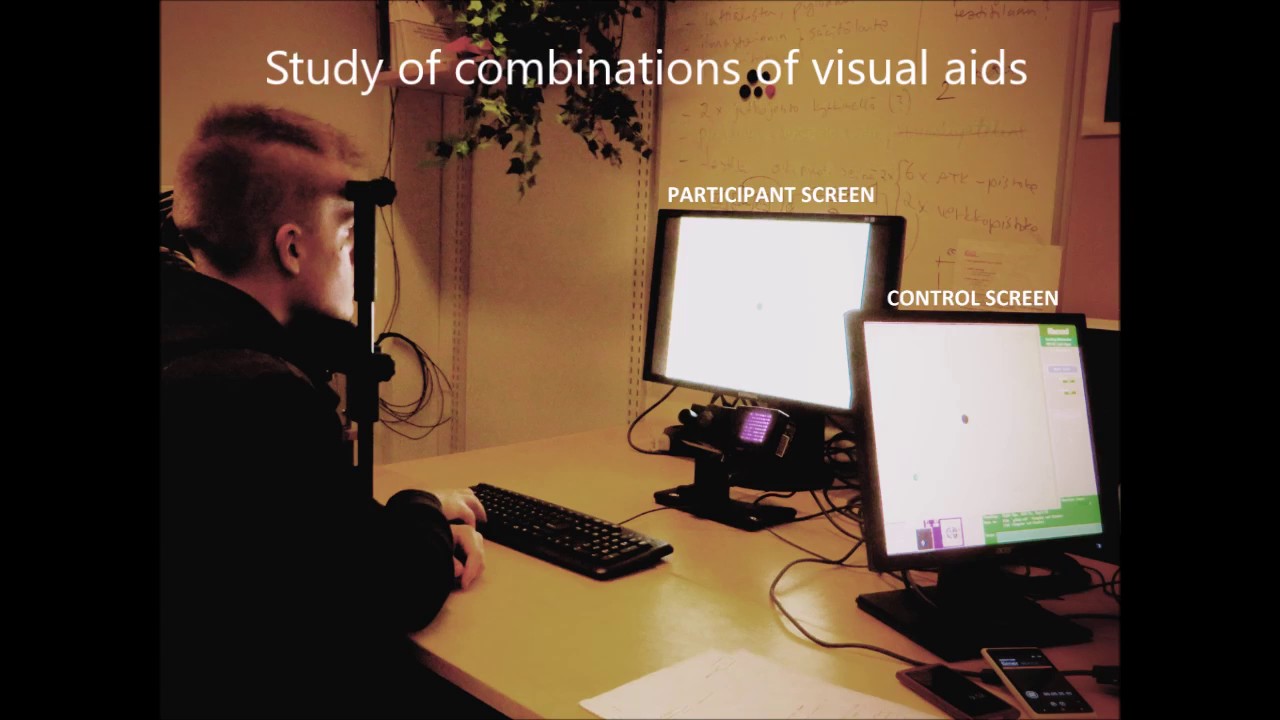

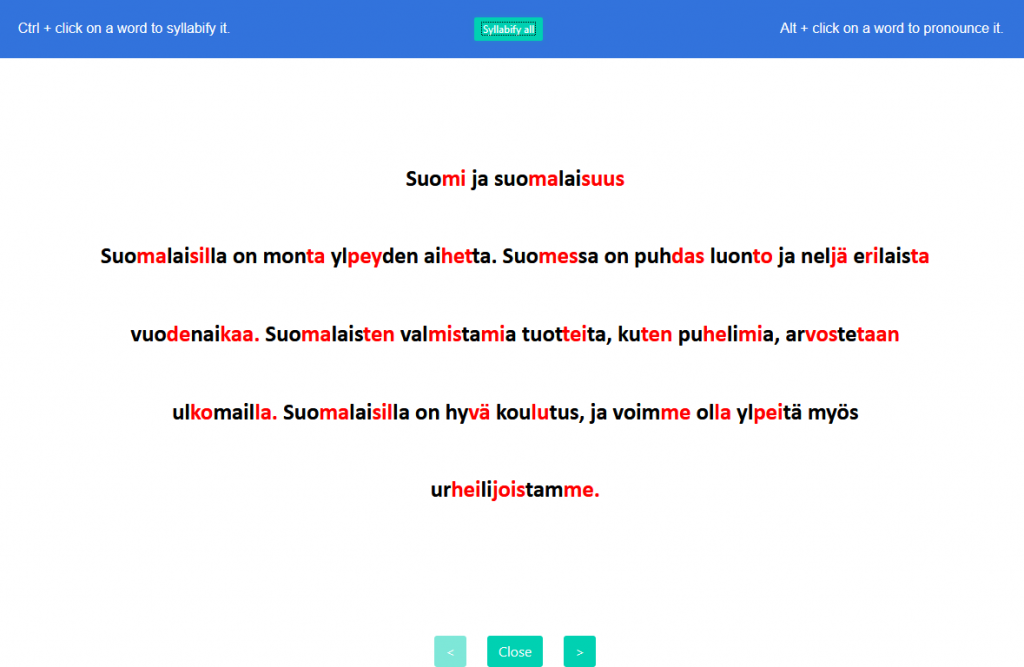

Silko is a web application developed by researchers at the University of Tampere. It allows schoolteachers to prepare online reading tasks for their students and then inspect how the students read. Students complete the tasks with an eye tracker connected to their PC. If the teacher has enabled reading support for a task, students may receive help while they read, such as hyphenation of words they gaze at for a long time. Teachers may also ask students to complete a questionnaire after the reading task. A questionnaire may contain general questions about the task text to check the student’s comprehension, or questions about specific words that the student gazed at long enough.
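Both the hyphenation support and the word-specific questions depend on detecting that a word has been "gazed at long enough". The sketch below shows one way this could work, assuming gaze data arrives as `(word_index, duration_ms)` fixation pairs and that a word qualifies once its accumulated dwell time reaches a threshold. The function name `words_to_support` and the 1000 ms threshold are hypothetical; this is not Silko's actual implementation.

```python
from collections import defaultdict

# Hypothetical threshold; the value Silko actually uses is not stated.
DWELL_THRESHOLD_MS = 1000


def words_to_support(fixations, threshold_ms=DWELL_THRESHOLD_MS):
    """Return indices of words whose accumulated gaze time reaches threshold_ms.

    fixations: iterable of (word_index, duration_ms) pairs in reading order.
    Words are returned in the order in which they first crossed the threshold.
    """
    dwell = defaultdict(int)  # word index -> total fixation time so far
    triggered = []            # words that crossed the threshold, in order
    seen = set()              # fast membership check for triggered words
    for word, duration in fixations:
        dwell[word] += duration
        if dwell[word] >= threshold_ms and word not in seen:
            seen.add(word)
            triggered.append(word)
    return triggered


# Word 1 accumulates 300 + 450 + 400 = 1150 ms and crosses the threshold.
print(words_to_support([(0, 250), (1, 300), (1, 450), (2, 200), (1, 400)]))
```

Accumulating dwell time across separate visits, rather than checking single fixations, means a word counts as difficult even if the reader returns to it several times with short fixations.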

Teachers can view students’ gaze paths in several visualizations. They may inspect an individual gaze path in a traditional gaze-plot visualization, or compare the reading performance of several students by replaying their gaze paths or word-focusing events. Teachers may also analyze numerical indicators such as reading speed, the number of regressions (backward eye movements to earlier parts of the text), and average fixation duration. Another statistic shows the teacher which words caused reading difficulties for most students.
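To make these indicators concrete, here is a minimal sketch of how they could be computed from one student's fixations, plus how per-student difficult words could be aggregated across a class. The `Fixation` record, the function names, the definition of a regression as a fixation on an earlier word than the previous one, and the 50% share used to call a word "difficult for most students" are all assumptions for illustration, not Silko's documented formulas.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Fixation:
    word: int         # index of the fixated word in the task text
    start_ms: int     # fixation onset, relative to the start of reading
    duration_ms: int  # how long the fixation lasted


def reading_indicators(fixations, word_count):
    """Compute illustrative indicators for one student's gaze path (a list)."""
    if not fixations:
        raise ValueError("no fixations recorded")
    last = fixations[-1]
    elapsed_ms = last.start_ms + last.duration_ms - fixations[0].start_ms
    # Assumed definition: a regression is a move to an earlier word.
    regressions = sum(
        1 for prev, cur in zip(fixations, fixations[1:]) if cur.word < prev.word
    )
    avg_fixation = sum(f.duration_ms for f in fixations) / len(fixations)
    return {
        "words_per_minute": word_count / (elapsed_ms / 60000),
        "regressions": regressions,
        "avg_fixation_ms": avg_fixation,
    }


def difficult_words(per_student_hard_words, min_share=0.5):
    """Words flagged as difficult by at least min_share of the students."""
    counts = Counter(w for words in per_student_hard_words for w in set(words))
    n = len(per_student_hard_words)
    return sorted(w for w, c in counts.items() if c / n >= min_share)


path = [Fixation(0, 0, 200), Fixation(1, 250, 250),
        Fixation(0, 550, 200), Fixation(2, 800, 200)]
print(reading_indicators(path, word_count=3))
print(difficult_words([["kettle", "ox"], ["ox"], ["ox", "bay"]]))
```

Each student's words are deduplicated with `set` before counting, so a student who struggled with the same word twice still counts only once toward the class-wide share.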