Projects

Short summaries of the research plans of selected projects

Visual Interaction Research Group

The Private and Shared Gaze: Enablers, Applications, Experiences (GaSP) project aims to develop and study demonstrators made possible by the rapid spread of eye tracking devices. The applications range from personal information management and web browsing aids to educational applications that make use of gaze-contingent digital lecture material. The intended outcomes of the project include publicly available software that enables gaze data collection, a methodology for remote usability testing with eye tracking, and a demonstrator of the potential of gaze data in the educational context. The project partners are the Humanities Lab at Lund University and the American University in Paris.

The project was funded by the Academy of Finland.

The main objective of the Mind, Image, Picture (MIPI) project is to answer the question “how do we look at pictures and what is their role in the constitution of the human mind?” In short, the project investigates how pictures become meaningful to the user, and how cultural meanings and signification emerge in the process of visual perception.

MIPI stands at the crossroads of different disciplines and draws on visual culture studies, cognitive vision studies and computer science. By using psychophysical methods, online qualitative data collection, computational data analysis, and eye-tracking technologies, we will gather experimental data on how people actually view different pictures. This empirical data will be interpreted from many different theoretical viewpoints in order to shed light on how pictures become meaningful for people. Together with the Tampere Research Centre for Journalism, Media, and Communication (COMET) at the University of Tampere and the Psychology of Evolving Media and Technology Research Group at the University of Helsinki, we will first develop the methods for observing the emergence of meaning and then proceed to experimentation to better understand the phenomenon.

The project was funded by the Academy of Finland.

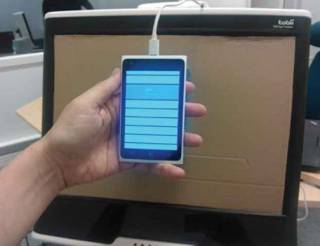

The Haptic-Gaze Interaction (HAGI) project worked in the field of human-technology interaction, more specifically on multimodal interaction techniques. The project began with basic research on combining eye pointing and haptic interaction. The basic research findings were then applied in a constructive research process in two areas: augmentative and alternative communication systems, and interaction with mobile devices. It was expected that haptic interaction would make it possible to apply gaze-based user interfaces in mobile devices much better than had been possible in the past, and would therefore open new opportunities for natural and efficient interaction.

HAGI was a joint project with MMIG, funded by the Academy of Finland. We published 25 peer-reviewed scientific articles in international venues to report the outcomes of the project.

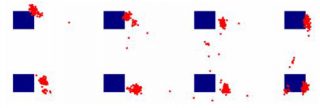

The aim of this project is to study eye-gaze data processing methods for the development of native gaze-contingent interfaces. The main focus is on discovering new algorithms that support efficient access to several operational modes and switching between them. These algorithms must tolerate noise in gaze direction measurement. The challenge in developing native gaze-contingent interfaces is the demand for convenient acquisition and manipulation of closely located objects using stateless, noisy input.

The main objectives of the study are to 1) discriminate, analyze and improve gaze-pointing algorithms, 2) develop natural and fast methods for switching between gaze-operated modes, 3) model a new concept of widgets for native gaze-contingent interfaces, and 4) develop a new application with a native gaze-contingent interface and study the impact of awareness of other users’ gaze paths. The practical aim of the study is to develop new technological solutions and to improve the accessibility of communication with machines via gaze. The study will contribute to a deeper understanding of the impact of awareness of an online partner’s object of attention in various virtual environments.

The project was funded by the Academy of Finland.

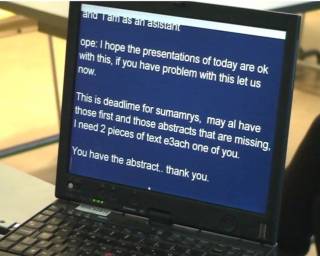

The aim of the project (Speech-to-Text for short) is to study real-time transmission between two communication modes, speech and writing, in human interaction with a method called “print interpreting”. Print interpreting means translating spoken language and accompanying significant audible information into written text simultaneously with the talk. The text is typed on a computer and displayed on a screen, where it emerges letter by letter. Print interpreting is needed as a communication aid for people with a hearing disability to give them access to speech.

The objectives of the study are 1) to investigate the process of print interpreting; 2) to investigate the comprehensibility of the interpretation; and 3) to develop new technology and methods for analyzing and supporting print interpreting. TAUCHI focuses on the third objective. For analysis, we use eye tracking to study how different ways of presenting the textual interpretation are perceived. Based on the results, the goal is to develop a new tool that helps interpreters produce text in a more easily perceived form. The joint project is led by Professor Liisa Tiittula from the School of Modern Languages and Translation Studies at the University of Tampere.

This project is funded by the Academy of Finland in the MOTIVE Research Programme (Ubiquitous computing and diversity of communication).

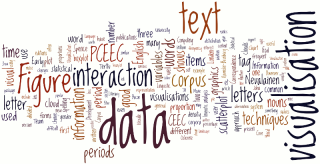

Communication in the modern world is more versatile than ever. Written language can vary from novels and newspaper articles to instant messaging and personal letters, while spoken language ranges from formal speeches and interviews to mobile and face-to-face conversations. The Data mining tools for changing modalities of communication (DAMMOC) project is about analysing and comparing language use in different contexts. Using state-of-the-art techniques from data mining and information visualisation, we are developing new tools and methods for studying this enormous variety of linguistic communication.

The project was based on intensive collaboration within the multidisciplinary consortium. The role of TAUCHI was to develop suitable visualizations and tools for interacting with them. The most important result of this task was a tool named Text Variation Explorer, developed to demonstrate such visualizations and interaction. The tool allows users to examine, visually and interactively, how linguistic parameters behave as the text window size and overlap change. In addition, it performs interactive principal component analysis based on a user-given set of words.
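
To illustrate what "linguistic parameters affected by text window size and overlap" can mean in practice, the following is a minimal sketch and not the Text Variation Explorer implementation. It assumes the type-token ratio (distinct words divided by total words) as the example parameter; the function names `tokenize` and `windowed_ttr` are hypothetical and chosen only for this illustration.

```python
import re


def tokenize(text):
    """Split text into lowercase word tokens (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())


def windowed_ttr(tokens, window_size, overlap):
    """Type-token ratio for each sliding window over the token list.

    window_size: number of tokens per window.
    overlap: number of tokens shared by consecutive windows.
    Returns a list of (start_index, ratio) pairs for full windows only.
    """
    if window_size <= 0 or not 0 <= overlap < window_size:
        raise ValueError("need window_size > 0 and 0 <= overlap < window_size")
    step = window_size - overlap
    results = []
    for start in range(0, len(tokens) - window_size + 1, step):
        window = tokens[start:start + window_size]
        # Assumed example parameter: distinct tokens / tokens in the window.
        results.append((start, len(set(window)) / window_size))
    return results


tokens = tokenize("a b a b c d c d")
ratios = windowed_ttr(tokens, window_size=4, overlap=2)
print(ratios)  # [(0, 0.5), (2, 1.0), (4, 0.5)]
```

Changing `window_size` or `overlap` changes both the number of windows and how smooth the resulting curve is, which is the kind of dependence an interactive tool lets the user explore.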

The project was funded by the Academy of Finland.

The Privacy in the Making project (PRIMA) studies the ways in which the penetration, development and use of IT (e.g., ubiquitous interaction, augmented reality, self-made media) have affected, affect and possibly will affect different stakeholders in sociotechnical settings, giving rise to privacy protection issues. The project is interested in how privacy is managed and how the conflicts that arise can be coped with by the actors themselves, solved by regulation of different kinds (e.g., policies, binding corporate rules, and statutes), or handled by various technical means. Assembling toolboxes that contain standard appliances for dealing with privacy problems has been pointed out as essential. The PRIMA project shares this view and acknowledges the value of familiarizing researchers with the needs and how they can be met in different contexts.

The work at TAUCHI focused on concrete technological and regulatory privacy mechanisms in the context of future ICT. We carried out a subproject on “Awareness of People and Discussions While Protecting Privacy”. A system that uses speech and eye gaze as input signals was developed and evaluated. It aims to provide ambient information about the pulse and themes of meetings so that they can be sensed remotely, without compromising the privacy of the meeting participants.

The project was funded by Nordunet.

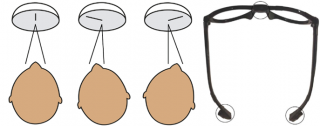

The project aims to investigate how translating from one natural language (L1) into another (L2) is performed by professional translators. The plan is to monitor the visual input in the source language (L1) with eye-tracking technology and to log the translators’ keystrokes in the target language (L2) with an output monitoring tool called Translog. Brain performance during perceptual input and motor output, monitored with a brain activity recording technique (EEG), is also within the scope of the project.

The task requirement – translation of identical semantic content from L1 into L2 – will guarantee the compatibility of input and output patterns at a certain level of structure and make it possible to compare and interpret output and input in terms of the complementary pair: comprehension and production. In addition, it is planned to prompt the interface between the working memory and long-term memory (LTM) of the bilingual translator with prompting feedback provided by a computerized tool and monitor brain performance during the prompting with EEG.

The project’s ultimate goal is to achieve an integrated experimental and application design that fulfils the requirements of a human-computer monitoring and feedback system. Building such a system will make it possible to study in depth the nature of a mind-brain-behaviour-computer interaction system with a complete-cycle mini-max set of variables. Such a system should find a range of industrial applications. Complete-cycle monitoring offers the possibility of providing its operator with feedback in different combinations for different purposes, e.g. with automatic prompting of her/his LTM for quickly finding means of expression in L2. Human-computer systems for feedback in cognitive terms are already within our reach if targeted with a systematic strategy for research and application.

This work has been funded by the European Commission via the Information Society Technologies Programme (IST) within the Sixth RTD Framework Programme.

The COGAIN network gathers European researchers, developers and specialists for the benefit of users with disabilities. By combining forces, we aim to develop new gaze-based systems that not only enable independent communication but also make life easier and more fun. The EC-funded project ended in 2009, but the network continues in the form of an association.

The network integrates cutting-edge expertise on interface technologies for the benefit of users with disabilities. COGAIN belongs to the eInclusion strategic objective of IST and focuses on improving the quality of life for those whose lives are impaired by motor-control disorders, such as ALS or CP. Its assistive technologies will empower the target group to communicate by using the capabilities they have and by offering compensation for capabilities that are deteriorating. The users will be able to use applications that help them to be in control of the environment, or achieve a completely new level of convenience and speed in gaze-based communication. Using the technology developed in the network, text can be created quickly by eye typing, and it can be rendered with the user’s own voice. In addition, the network will provide entertainment applications to make the lives of the users more enjoyable and more equal. COGAIN believes that assistive technologies serve best by providing applications that are both empowering and fun to use.

COGAIN aims to overcome the tight coupling of hardware and software in current research and development work. Due to the fragmentation of research activities, a given software system often works only with a particular eye tracking device. The same applies to tools for analysing efficiency and usability, which are tailored to a particular set-up. COGAIN will work for modularisation and standardisation that will integrate the existing pieces into a portfolio of compatible and usable tools and devices.

The usability, comfort and take-up of the results will be ensured by the strong involvement of user communities in the project. Special activities are planned both for involving users in design and for spreading the results to those in need of the technology. COGAIN will also contribute to bringing down the price of eye tracking technology, both for existing devices and by developing alternatives for everyday use at home and on the move.

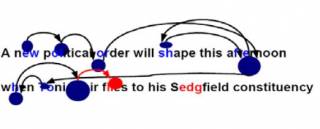

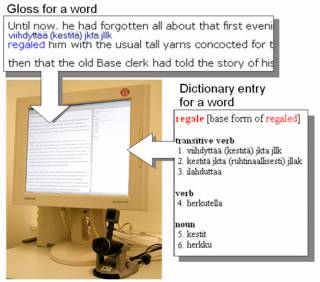

The overall objective of this project is to enable innovative and more natural forms of human-computer interaction by designing interfaces that utilise gaze tracking, complemented by speech input in certain cases, in systems which respond and react to user eye movements. The project aims to pave the way for new applications of the technology by evaluating it in two prototype systems. iDict is a translation tool that tracks, and is triggered by, cues in the user’s gaze pattern, and which can identify areas where the user is having particular difficulties. In such cases, it will automatically propose additional information or take appropriate actions to help users. iTutor is a multimedia application to support maintenance activities where people need their hands free for other tasks. The application will communicate automatically in response to the user’s gaze, via a speech interface, or both, and will also make use of wearable computing technology. The results of the project are expected to facilitate human-computer interaction by making systems more aware of and more responsive to users.

This work has been funded by the European Commission via the Information Society Technologies Programme (IST) within the Fifth RTD Framework Programme.
