The highlight of June for AI Hub was the joint workshop on audio and speech technologies. The hybrid workshop took place at the newly opened Nokia Arena in Tampere and online through the Prospectum Live platform.

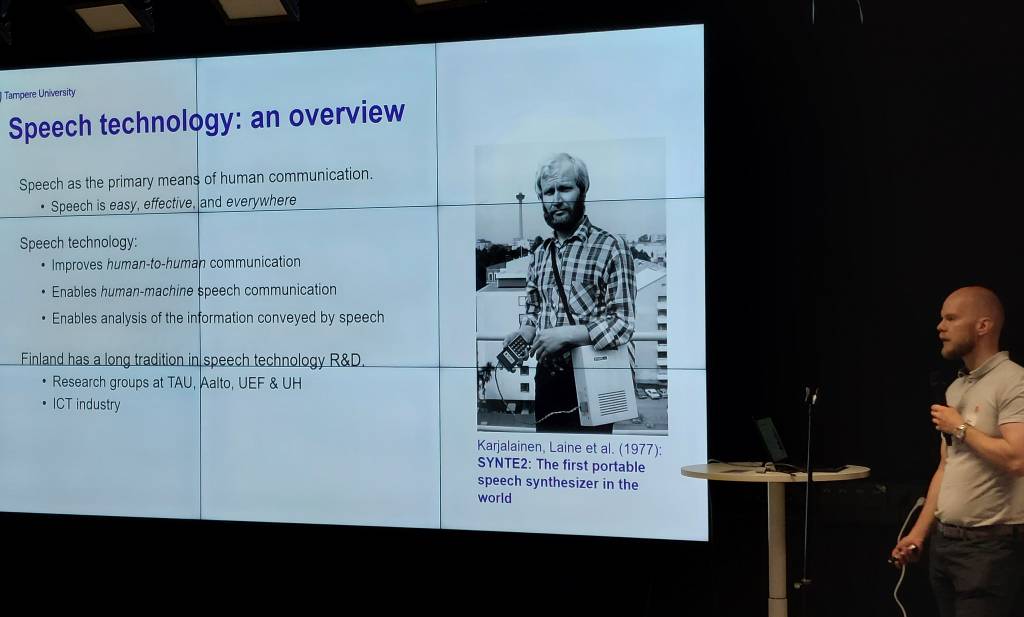

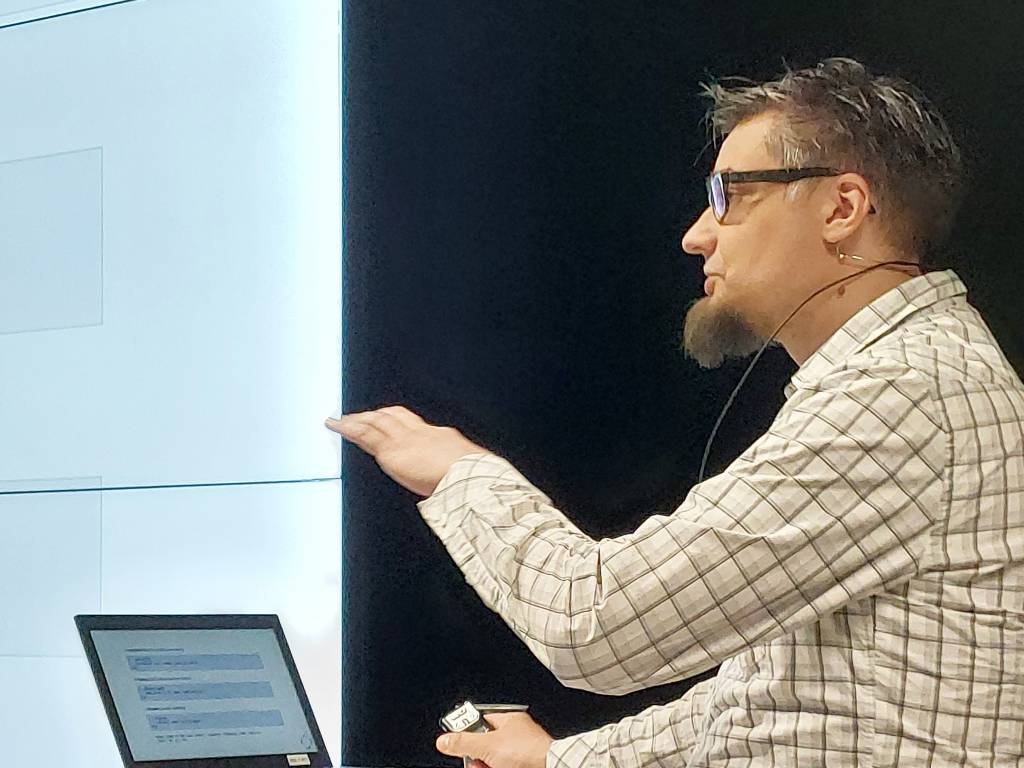

The organising team included globally leading experts studying these topics at Tampere University, and the programme also featured a few selected case study presentations from companies. Four people from AI Hub Tampere’s core team were present, as well as several speakers from Prof. Virtanen’s research group. Professor Virtanen himself opened the event and later presented audio and speech processing on a technical level. The presentation introduced sound classification, self-supervised and contrastive machine learning, and pre-trained models. It also brought up opportunities and the challenges the technology currently faces. For example, source separation and echo still cause problems in applications, and annotating the collected data is very laborious. Yet machine learning can solve multiple tasks simultaneously, and the technology opens opportunities for a wide range of new solutions and applications.

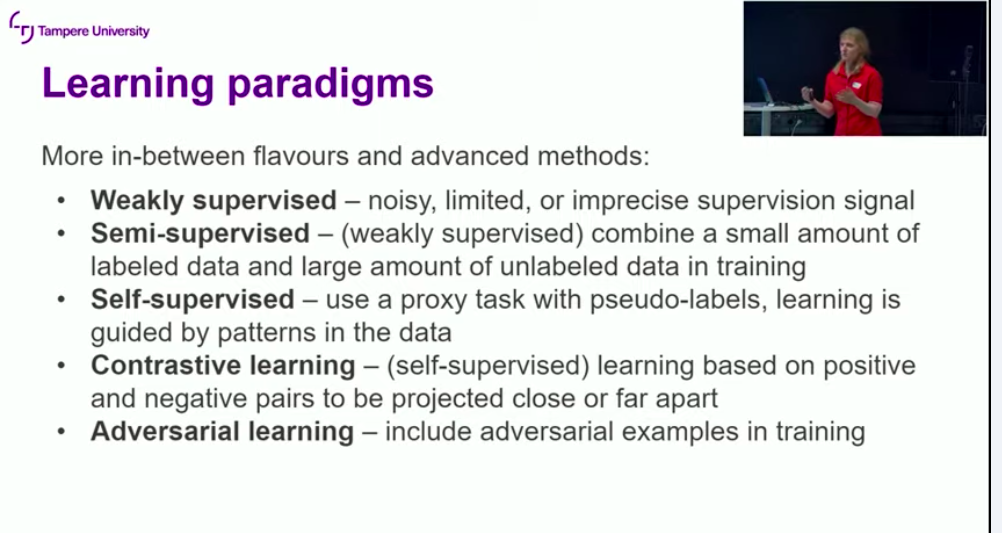

Annamaria Mesaros first presented an overview of applications in the field, including audio content analysis of what we and our devices perceive in everyday soundscapes. She then introduced machine learning methods, acoustic features, DNN topologies, learning paradigms and emerging approaches.

Okko Räsänen went through content analysis of speech. Human speech contains several layers of information: prosodic, affective, and linguistic content, to mention a few. Speech can be used as an identity recognition or verification tool and as training material for language applications or robotics. Many of these applications are used in health and wellbeing services.

Archontis Politis’ presentation was about spatial audio. It is used in planning and developing smart city technologies, such as sound monitoring, autonomous vehicles, smart homes and building engineering. Hearing aids and assisted hearing technologies have a significant impact on human wellbeing. Also, VR and other immersive media experiences rely on spatial audio.

Roel Pieters from AI Hub gave a presentation on speech for human-robot collaboration. The presentation featured demos of voice-operated robots that learned tasks step by step from single voice commands. The commands consisted of simple word pairs, such as ‘action, location’.
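To illustrate the idea of an ‘action, location’ word pair, here is a minimal Python sketch of how a transcribed voice command could be turned into a structured instruction. It is not taken from the demos: the vocabularies, the `Command` type and the `parse_command` function are all hypothetical names chosen for this example, and the sketch assumes the speech has already been transcribed to text.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabularies; the words used in the actual demos are not known.
KNOWN_ACTIONS = {"pick", "place", "move"}
KNOWN_LOCATIONS = {"table", "shelf", "box"}


@dataclass(frozen=True)
class Command:
    """A single 'action, location' instruction for the robot."""
    action: str
    location: str


def parse_command(transcript: str) -> Optional[Command]:
    """Return a Command if the transcript is a known two-word pair, else None."""
    # Normalise case and treat the comma as a plain separator.
    words = transcript.lower().replace(",", " ").split()
    if len(words) != 2:
        return None
    action, location = words
    if action in KNOWN_ACTIONS and location in KNOWN_LOCATIONS:
        return Command(action, location)
    return None
```

In this sketch, `parse_command("Pick, table")` yields a `Command` with action `pick` and location `table`, while anything outside the two small vocabularies is rejected rather than guessed at.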

Toni Heittola represented the MARVEL EU project at the workshop and talked about what the project has experimented with and achieved using audio, visual and multimodal AI systems in Trento (Italy), Malta, and other European cities. There, audio technology has been used to detect anomalous and dangerous events. Heittola also walked the audience through a hands-on tutorial on AI for audio, which relied on a convolutional neural network and a shared Python repository.

The afternoon session featured case studies from companies using audio and speech technologies in their products and services. Here are some case examples from the field:

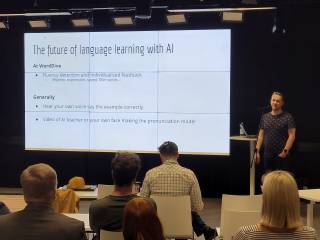

First, Nicolas Kivilinna of WordDive presented a use case of Language Learning Supported by AI. The language learning app analyses the speaker’s phonetic output and gives suggestions on how to improve their English pronunciation.

Secondly, Markku Salmela and Aino Koskimies of Meluta presented the company’s sound-based situational awareness technology and Meluta-MAX™, an intelligent signal processing platform.

Lauri Saikkonen presented Yousician and the tech stack behind the software, which includes PyTorch and TensorFlow. The music learning app’s patented AI technology gives players real-time feedback while they learn to play, for example virtually alongside James Hetfield and Kirk Hammett.

AI Hub Tampere would like to thank all the presenters and the technical staff for the successful workshop, and the more than 50 attendees for taking part and raising interesting questions. If you’d like to cooperate with Tuomas Virtanen and his research group, please contact

tuomas.virtanen ( a ) tuni.fi or okko.rasanen ( a ) tuni.fi for the next steps.

You can attend AI Hub Tampere’s free workshops, join our mailing list, or inquire about our free pilot projects by emailing

info.aihub ( a ) tuni.fi

Text and photos by Ritva Savonsaari