Projects

Short summaries of the research plans of selected projects

Research Group for Emotions, Sociality and Computing

The field of user interfaces for industrial heavy machinery and passenger vehicles is evolving rapidly, with new advances and innovations emerging every year. As technologies such as AI, AR, and autonomous systems continue to mature, we can expect even more sophisticated and user-friendly interfaces in the years to come. The aim of the MIXER project is to advance several research and manufacturing areas by creating novel technologies, applications, and standards for in-vehicle interaction and remote situational awareness. Key benefit areas will include integrating current and upcoming technologies and tools to create next-generation Driver and Occupant Management systems, augmenting content to enhance situational awareness using AR/HUD displays, standardizing processes for advanced driver-assistance systems (ADAS) and SAE levels of autonomy, developing AI voice assistants for multimodal in-cabin interaction, and defining connected-vehicle infrastructure to create a Vehicular Metaverse.

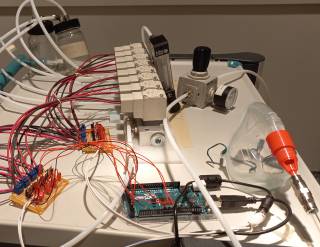

SMELLODI is a project funded by EIC Pathfinder Open that aims to develop intelligent electronic sensors that can detect and digitally transmit healthy and pathologically altered body odors. The research team at Tampere University is developing a self-learning odor display prototype. The aim is to study whether a recipe for an unknown scent can be produced from known odor components using an electronic nose (eNose), an olfactory display, and optimization algorithms. The odor display first uses the eNose to measure the electronic fingerprint of the target odor. The olfactory display then reproduces a first approximation of the target odor. The reproduced odor is measured again by the eNose, and the two fingerprints are compared. Optimization methods, namely stochastic search algorithms, are used to iteratively improve the recipe of the reproduced odor so that the difference between the two responses is minimized. Human tests are also used to verify that the synthesized odor closely resembles the original. The display can act as a terminal device for delivering odors transferred over a communication network.
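
The measure, compare, and adjust loop described above can be sketched as follows. This is a minimal illustration, not the project's implementation: it assumes a hypothetical linear sensor model in which the eNose fingerprint of a mixture is the recipe-weighted sum of component fingerprints (the matrix `COMPONENT_FINGERPRINTS` is invented), and it uses a simple (1+1) random search as one example of a stochastic search algorithm.

```python
import numpy as np

# HYPOTHETICAL sensor model: one row per odor component, one column per
# eNose channel. A mixture's fingerprint is assumed to be the
# recipe-weighted sum of the rows (real eNose responses are nonlinear).
COMPONENT_FINGERPRINTS = np.array([
    [1.0, 0.2, 0.0, 0.5],
    [0.1, 0.9, 0.3, 0.0],
    [0.0, 0.4, 1.0, 0.2],
])

def measure(recipe: np.ndarray) -> np.ndarray:
    """Stand-in for an eNose measurement of the displayed mixture."""
    return recipe @ COMPONENT_FINGERPRINTS

def distance(a: np.ndarray, b: np.ndarray) -> float:
    """Euclidean distance between two fingerprints."""
    return float(np.linalg.norm(a - b))

def optimize_recipe(target, iterations=2000, step=0.1, seed=0):
    """(1+1) random search: perturb the recipe, keep it only if the
    reproduced fingerprint moves closer to the target fingerprint."""
    rng = np.random.default_rng(seed)
    n = COMPONENT_FINGERPRINTS.shape[0]
    recipe = np.full(n, 1.0 / n)  # first approximation: equal proportions
    best = distance(measure(recipe), target)
    for _ in range(iterations):
        # Component amounts cannot be negative, so clip at zero.
        candidate = np.clip(recipe + rng.normal(0.0, step, n), 0.0, None)
        err = distance(measure(candidate), target)
        if err < best:
            recipe, best = candidate, err
    return recipe, best
```

In a physical setup, `measure` would be a real eNose reading of the display output, and the acceptance test would need to tolerate sensor noise, for example by averaging repeated measurements.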

The project develops methods for machine understanding of the driving situation, particularly for monitoring the driver’s state (e.g., gaze direction and alertness). This makes it possible to tailor the presented information to the situation and its urgency. Another focus is multimodal interaction: information can be shown on screens, presented as audio, or delivered as haptic feedback. The chosen modality depends on the type of information and the current driving situation. Entirely new innovations are expected from LIDAR sensing inside the car, which would improve the monitoring of occupants’ state and enable new interaction methods.
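
As an illustration of situation-dependent modality selection, the following sketch picks output channels for a single message from its urgency and a simple driver-state estimate. The `DriverState` fields, the urgency labels, and the rules themselves are hypothetical assumptions chosen for readability, not the project's design.

```python
from dataclasses import dataclass

@dataclass
class DriverState:
    eyes_on_road: bool  # hypothetical: derived from gaze direction
    alertness: float    # hypothetical scale: 0.0 (drowsy) .. 1.0 (fully alert)

def choose_modalities(urgency: str, state: DriverState) -> list[str]:
    """HYPOTHETICAL rule set for choosing output channels for one message."""
    if urgency == "critical":
        # Safety-critical warnings use every channel at once.
        return ["screen", "audio", "haptic"]
    modalities = []
    if state.eyes_on_road:
        # Keep the driver's gaze on the road: prefer audio over the screen.
        modalities.append("audio")
    else:
        # The driver is already looking away from the road, so a visual
        # message does not require an additional glance.
        modalities.append("screen")
    if state.alertness < 0.5:
        # Add a haptic cue (e.g. steering-wheel vibration) for a drowsy driver.
        modalities.append("haptic")
    return modalities
```

A rule table like this is easy to inspect and test; a learned policy could replace it once enough in-car interaction data is available.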

AMICI will validate its technological solutions by building prototypes and analyzing how users interact with them. One planned prototype extends car systems so that both the driver and passengers can use them throughout the vehicle. The Origo steering wheel concept developed in the previous MIVI project forms the technological basis for this prototype.

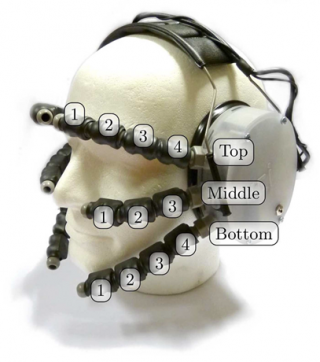

Construction of an olfactory display.

Cars have turned into computers on wheels. The modern car has dozens of computer systems taking care of engine control, safety systems, and networked services. The number of these systems will only increase in the future, promising safer, better, and more enjoyable journeys. But how can we make sure that all these computer systems do not steal the driver’s attention? How can interaction with car systems be designed to feel effortless, intuitive, and non-distracting? How can drivers be given all the necessary information without overloading them? And how can it all be made safe? The MIVI project seeks answers to these questions by combining research findings with industrial R&D solutions.

MIVI is a national project funded by Business Finland and the participating companies. The consortium consists of software vendors, hardware vendors, car designers and an interdisciplinary group of researchers.

The present aim is to develop and realize a programmable digital sensory reality world that enables the creation of genuine olfactory experiences in VR. We will produce various odors and control their authenticity, intensity, dynamics, and timing so that they are accurately synchronized with other sensory information (audio and video) and with currently available means of interaction (e.g., wands, head and body turns, and movement by teleportation). Once the technology has been developed in the project, we will be able to measure odors from real odorous objects, classify them with AI/ML, and reproduce them while the user interacts in VR. The scent production device can also act as a terminal for transferring scents digitally. Our vision is that this will not only revolutionize human-technology interaction but also create significant benefits for basic, clinical, and applied research.

To the best of our knowledge, no one has managed to program scents and integrate them with other sensory information with the precision and accuracy we are now proposing. Our innovations will pave the way for realistically augmenting human senses with odors in VR for the first time.
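
Synchronizing odors with audio and video requires compensating for the time it takes an odor to travel from the release valve to the user’s nose. The following is a minimal scheduling sketch, assuming a single constant transport delay; the `OdorCue` structure and the delay value are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class OdorCue:
    odor: str
    onset_s: float     # scene time at which the odor should be perceived
    duration_s: float  # how long the odor should be perceived
    intensity: float   # hypothetical: fraction of maximum flow, 0.0 .. 1.0

def schedule_release(cues, transport_delay_s):
    """Return (valve_open_s, valve_close_s, odor, intensity) tuples in time order.

    Valves are opened and closed early by the ASSUMED constant transport
    delay, so that the odor arrives together with the matching
    audio-visual event.
    """
    plan = []
    for cue in sorted(cues, key=lambda c: c.onset_s):
        open_s = max(0.0, cue.onset_s - transport_delay_s)
        close_s = cue.onset_s + cue.duration_s - transport_delay_s
        plan.append((open_s, close_s, cue.odor, cue.intensity))
    return plan
```

With a 0.4 s delay, a pine cue meant to be perceived at 1.0 s opens its valve at 0.6 s. Real delivery delays depend on flow rate, tubing, and the user’s head position, so a constant delay is a simplification.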

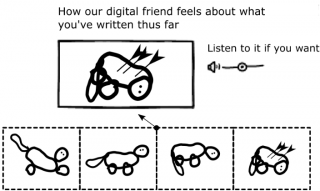

EmoDiM studies the role of emotions in technology-mediated interaction. We focus on understanding how the quality of discussion could be improved in digital media, especially in online news commenting. We design user interface mechanisms that could improve self-reflection and implicit emotion regulation, aiming to intervene meaningfully in detrimental online behavior and to reward positive behavior. We combine critical and speculative design approaches with experimental research on emotional processes.

EmoDiM is funded by the Academy of Finland.

Attention deficit hyperactivity disorder (ADHD) is the most prevalent neurodevelopmental disorder in children. It manifests itself in problems with concentration, behavioural inhibition, and overactive behaviour. In the project, we aim to leverage innovative technological solutions and computerised training games to enhance the existing means of ADHD treatment.

We believe that novel ways of interacting with computers through voluntarily controlled gaze, facial expressions, hand gestures, and body movements offer great potential for devices and applications that support rehabilitation in ADHD.

Because computers can measure behaviour automatically and objectively, we anticipate that the project will also contribute to the development of objective evaluation criteria and diagnostic tools for the clinical assessment of ADHD.

Funded by the Academy of Finland.

The Digital Scents (DIGITS) research project develops a system prototype that measures scents with an electronic nose (eNose) and transforms them into digits, that is, digital data. The scents can then be reproduced by a carefully controlled, self-learning scent synthesizer. In the future, synthesized scents could be perceived by people, and even by other machines, anywhere in the world with the help of a digital communications network. The research lays the groundwork for systems that can transmit olfactory perceptions and experiences in real time anywhere in the world.
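
One simple way to turn an eNose fingerprint into digits for network transfer is to quantize each sensor channel to a fixed number of bits. The sketch below assumes readings normalized to the range 0 to 1 and uses one byte per channel; both the range and the byte encoding are illustrative assumptions, not the project’s data format.

```python
def encode_fingerprint(values, lo=0.0, hi=1.0):
    """Quantize each sensor reading to one byte (0..255) for transmission.

    ASSUMPTION: readings are normalized to [lo, hi]; values outside
    the range are clipped.
    """
    span = hi - lo
    out = bytearray()
    for v in values:
        clipped = min(max(v, lo), hi)
        out.append(round((clipped - lo) / span * 255))
    return bytes(out)

def decode_fingerprint(payload, lo=0.0, hi=1.0):
    """Map received bytes back to approximate sensor readings."""
    span = hi - lo
    return [lo + b / 255 * span for b in payload]
```

Eight-bit quantization bounds the reconstruction error at half a quantization step (about 0.002 for a 0 to 1 range); using more bits per channel trades bandwidth for precision.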

The project represents a new kind of human-technology interface and approach to interface development, with significant potential impact. Introducing scents into the predominantly audio-visual communications culture will diversify the use of communications networks. The implementation is based on interdisciplinary research covering chemical sensing, microfluidics, machine learning, psychology, and interactive technology, together with company collaboration. The work will cover research, development, and integration of sensor and actuator technology, as well as human testing of the system.

The Academy of Finland is funding the project as part of the fourth theme, Advanced microsystems: from smart components to cyber-physical systems, of its research, development and innovation programme ICT 2023. The total budget of the project is about EUR 1.3 million, of which the Academy of Finland will fund EUR 900,000.

Mimetic interfaces are a new type of user interface technology in which computing and technical solutions are built to measure and imitate (mime) human behavioural functions so that lost functions can be restored digitally. The specific aim of the current project is to study and develop user interface technology targeted especially at improving and rehabilitating facial function (i.e. facial mimics) after unilateral facial paresis. Ultimately, we envision technology that measures the electrical activity of the facial muscles on the intact side of the face and routes this signal via a small-scale computing device to the affected side to stimulate the dysfunctional muscles. This represents a new type of user interface technology in which individuals can use their own bodily behavior to simultaneously control the behavior of other parts of their body. In essence, a computer will be developed to act as a driver of human behavior. The project will be implemented by a multidisciplinary consortium with expertise in computer science (especially man-machine interaction), the psychology and psychophysiology of facial behavior, engineering, and medicine (specialists in the clinical treatment of facial paresis).
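
The envisioned signal route, from muscle activity on the intact side to stimulation on the affected side, can be illustrated with a common EMG processing chain: remove the offset, rectify, smooth into an envelope, and threshold the envelope with hysteresis to produce on/off stimulation commands. This is a sketch under assumed parameters (the window length and both thresholds are hypothetical); it does not describe the project’s actual signal processing and omits everything a real stimulator would need, such as safety limits and stimulation waveforms.

```python
import numpy as np

def emg_envelope(signal, window):
    """Remove the DC offset, full-wave rectify, and smooth with a moving average."""
    rectified = np.abs(signal - np.mean(signal))
    kernel = np.ones(window) / window
    # mode="same" keeps the envelope aligned with the input samples.
    return np.convolve(rectified, kernel, mode="same")

def stimulation_commands(envelope, on_level, off_level):
    """Hysteresis threshold: True while the intact side is judged active.

    Using a lower off_level than on_level prevents rapid toggling when
    the envelope hovers around a single threshold.
    """
    active = False
    commands = []
    for value in envelope:
        if not active and value >= on_level:
            active = True
        elif active and value <= off_level:
            active = False
        commands.append(active)
    return commands
```

With a synthetic burst of muscle activity, the commands switch on shortly after the burst starts and off shortly after it ends.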

DiagNOSE is a joint research project between two research groups from the University of Tampere (ESC and the School of Medicine), Tampere University of Technology, and three industrial partners (Environics, Bionavis, and Jolla).

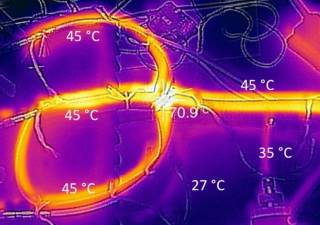

The project aims to develop artificial nose technology that can analyze chemical compounds related to cancer and to the functioning of human emotion systems. The ESC group focuses on whether human emotional processes can be detected with artificial nose technology. Chemosensory signals exuded during emotional reactions have been found to affect other people’s behavior and psychological state, but no one has so far analyzed emotions with an artificial nose.
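
Whether emotional processes can be detected from chemosensory signals is the open research question here. If labelled eNose measurements were available, one simple baseline for testing it would be a nearest-centroid classifier, sketched below; the two-channel fingerprints and the labels `stress` and `neutral` are invented for illustration.

```python
import numpy as np

def fit_centroids(samples, labels):
    """Average the training fingerprints of each class."""
    X = np.asarray(samples, dtype=float)
    y = np.asarray(labels)
    return {c: X[y == c].mean(axis=0) for c in sorted(set(labels))}

def classify(fingerprint, centroids):
    """Assign the class whose centroid is nearest in Euclidean distance."""
    x = np.asarray(fingerprint, dtype=float)
    return min(centroids, key=lambda c: np.linalg.norm(x - centroids[c]))
```

A baseline like this mainly shows whether the measurements separate the classes at all; above-chance accuracy on held-out samples would be the first evidence worth pursuing with stronger models.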

Funded by Tekes.

The project aims to create methods and operating models for connecting customers’ emotions with strategic business planning. The main focus is to create and study the customers’ emotional pathway alongside the customer journey. The emotional pathway refers to the emotions that arise during the different encounters of the customer journey. It is studied by combining qualitative methods with psychophysiological experimental research. The results will be made concrete for companies so that they can use them in business development.

Make My Day is a joint project between ESC and CIRCMI, funded by Tekes. The industrial partners of the project are Nokian Tyres, Särkänniemi Adventure Park, the insurance company Keskinäinen Vakuutusyhtiö Turva, and the veterinary clinic Kuninkaantien eläinklinikka.

In the HapticAuto project we will carry out basic research on multimodal interaction and apply the results in a new domain, automotive systems. New haptic actuator solutions and haptic feedback technologies will also be studied in collaboration with a research group from Stanford University and related industrial partners.

Funded by Tekes.

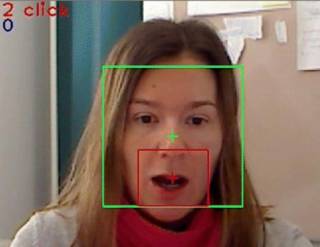

The project aims to develop and expand research knowledge on new ways of using automatic face analysis in social-emotional human-technology interaction (HTI) and to build new support for vision-based user interfaces. Its main objective is to design and implement a new type of user interface that incorporates different machine vision techniques for analyzing global facial information received from the user. Global facial information combines all detectable visual cues from the user’s face and head, such as facial expressions, eye movements, gaze direction, head movement, and head orientation. The applicability and usability of the developed interface will be validated through pilots in the target application areas: technology-mediated social-emotional communication, networking, simulations, and gaming.

The research in the project is carried out by the Machine Vision Interaction Consortium (MAVIC), which consists of Finnish and Russian research teams. The Finnish team includes researchers from the ESC and MMIG groups. The Russian team is located at the Laboratory of Neuroinformatics of Sensory and Motor Systems (NISMS), A.B. Kogan Research Institute for Neurocybernetics, Southern Federal University, Russian Federation. The GoFASE project is funded jointly by the Academy of Finland and the Russian Foundation for Humanities.

Starting in 2008, the ESC group collaborated with Nokia Research Center (now Nokia Technologies/Labs), co-creating technological research on a subcontracting basis. This work continued through 2015 with Nokia Technologies.

Since 2007, we have actively developed joint teaching and research collaboration between the Tampere Unit for Computer-Human Interaction at the University of Tampere (UTA) and the Human Centered Technology Unit at Tampere University of Technology (TUT). Under the leadership of Prof. Surakka, several grants have been successfully obtained for this work, which has resulted in an official joint curriculum between the universities, the “Master’s Degree Programme in Human-Technology Interaction”.

Funded by University of Tampere, Tampere University of Technology, and The Federation of Finnish Technology Industries.

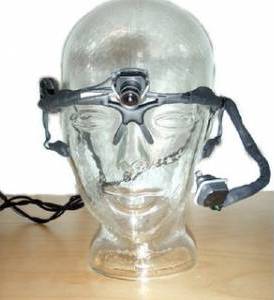

In the Face Interface project we aim to develop wireless, non-invasive technologies for measuring gaze direction and facial activity in different human-computer interaction tasks, for example, typing and Web browsing.

The new type of user interface developed in the project uses electrical and video-based signals from the facial muscles and eyes, together with novel lightweight wireless electrode technology. The system enables new kinds of human-computer interaction studies that exploit facial activity normally used for communicating facial expressions and emotions in human-human interaction.
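
One way to combine the two signal sources is to use gaze for pointing and a voluntary facial muscle contraction, detected from the electrical signal, as the selection command. The sketch below assumes that gaze coordinates and a normalized facial activity level arrive together as samples; the `Target` layout, the threshold, and the sample format are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Target:
    """HYPOTHETICAL on-screen target, given as a rectangle in pixels."""
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, gx: float, gy: float) -> bool:
        return self.x <= gx <= self.x + self.w and self.y <= gy <= self.y + self.h

def selections(samples, targets, threshold):
    """Yield the target under the gaze at each rising edge of facial activity.

    samples: iterable of (gaze_x, gaze_y, facial_activity) tuples, where
    facial_activity is ASSUMED to be a normalized EMG level.
    """
    was_active = False
    for gx, gy, level in samples:
        active = level >= threshold
        if active and not was_active:  # the onset of a contraction acts as a "click"
            for t in targets:
                if t.contains(gx, gy):
                    yield t.name
                    break
        was_active = active
```

Triggering only on the rising edge means that a sustained contraction selects a target once rather than repeatedly.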

Face Interface is a project of the Wireless User Interfaces consortium. Wireless User Interfaces is a multi-disciplinary research consortium based in Tampere, Finland. The consortium consists of four research groups from the University of Tampere and the Tampere University of Technology. Our agenda is to develop wireless, wearable, and non-invasive technology for measuring human behaviour, especially physiological signals.

Funded by the Academy of Finland.

Mobile Haptics is a joint research project between the University of Tampere, Finland, and Stanford University, USA. The project focuses on haptic feedback in unimodal and multimodal user interfaces. The research is mainly empirical basic research, in which novel device and software prototypes from constructive research are studied. This is supported by user-centered research in which the empirical results are applied in implementing user interfaces for special user groups. The research is also strategic and groundbreaking in nature, as such interfaces have not previously been developed and tested this thoroughly. Our aim is to maintain active and continuous research collaboration between the universities and companies in the consortium.

Funded by Tekes.